Abstract:

Spectral Select explores the spectral content of one sample and the amplitude curve of a second sample and unites them in a new musical context. The meditative character of the output created by iteration is both contrasted and structured by louder amplitude peaks.

In a revised version, Spectral Select was spatialized in Ambisonics HOA-5 format.

Supervisor: Prof. Dr. Marlon Schumacher

A study by: Anselm Weber

Winter semester 2021/22

University of Music, Karlsruhe

About the study:

In which forms of expression is the connection between frequency and amplitude expressed ? Are both areas intrinsically connected and if so, what could be approaches to redesigning this order?

Such questions have occupied me for some time. That’s why the attempt to redesign them is the core topic of Spectral Select.

I was inspired by AudioSculpt from IRCAM, which we got to know in our course: “Symbolic Sound Processing and Analysis/Synthesis” together with Prof. Dr. Marlon Schumacher and Brandon L. Snyder and which we partially rebuilt.

Spectral Edit works on a similar principle, but instead of having a user work out interesting areas within a spectrum of a sample, it was decided to use a second audio sample. This additional sample (from now on referred to as “amplitude sound” in the course of this article) determines how the first sample (from now on referred to as “spectral sound”) is to be processed by OM-Sox.

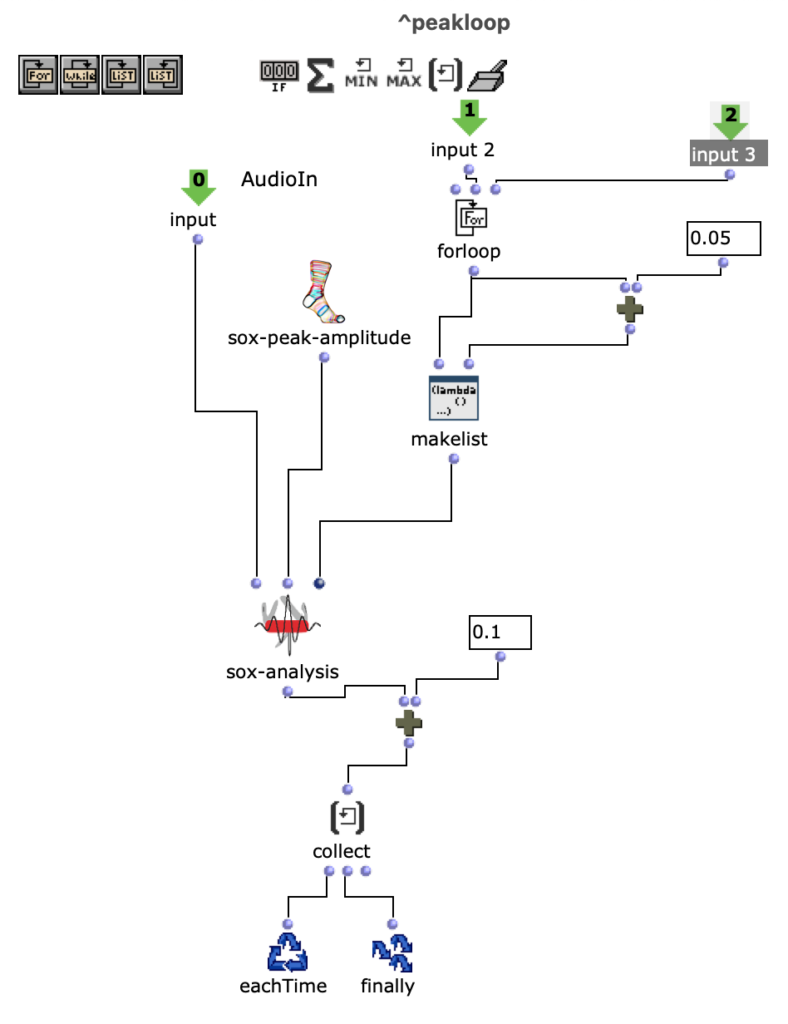

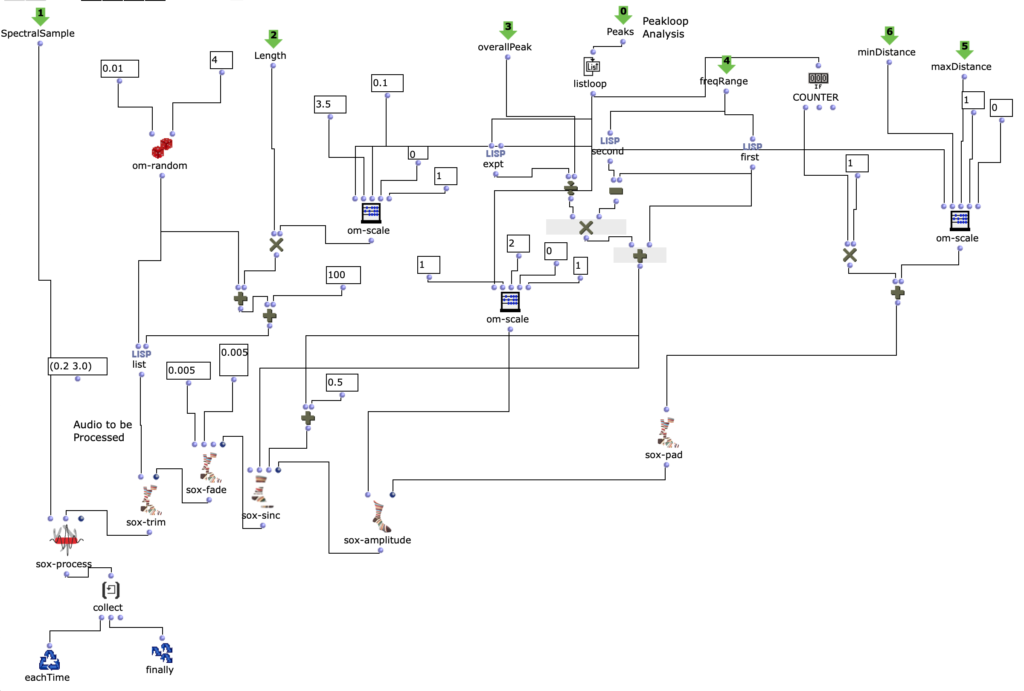

To achieve this, two loops are used:

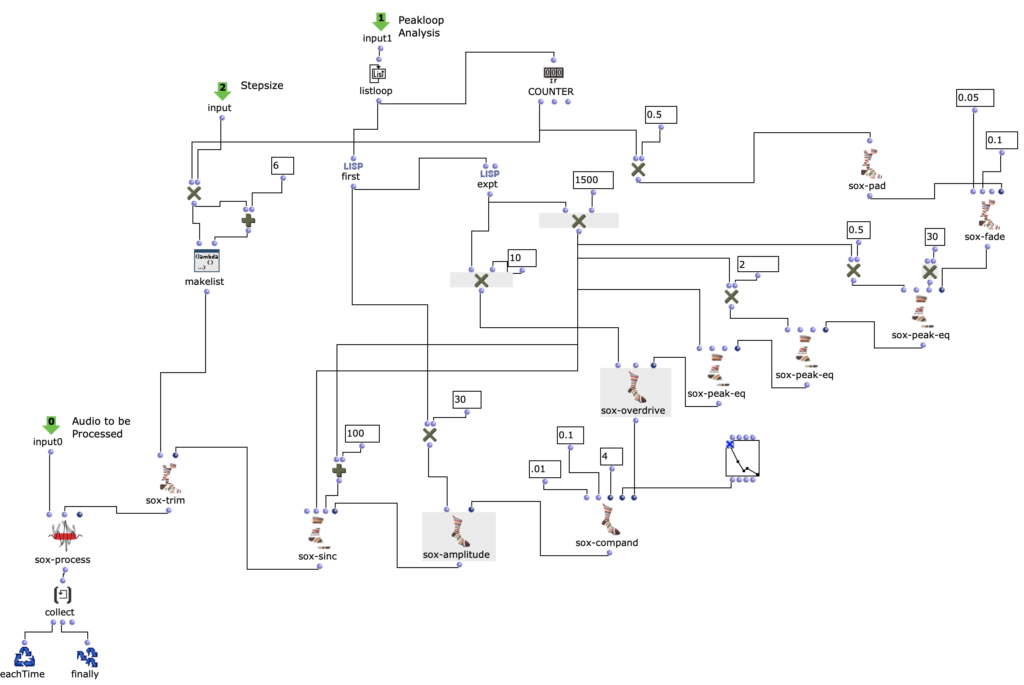

First, individual amplitude peaks are analyzed out of the amplitude sound in the first “peakloop”. This analysis is then used in the heart of the patch, the “choosefreq” loop, to select interesting sub-ranges from the spectral sample. Loud peaks filter narrower bands from higher frequency ranges and form a contrast to weaker peaks, which filter somewhat broader bands from lower frequency ranges.

How small the respective iteration steps are affects both the length and the resolution of the overall output. Depending on the sample material, a large number of short grains or fewer but longer subsections can be created. However, both of these parameters can be selected freely and independently of each other.

In the enclosed piece, for example, a relatively high resolution (i.e. an increased number of iteration steps) was chosen in combination with a longer duration of the cut sample. This creates a rather meditative character, whereby no two sections will be 100% identical, as there are constantly minimal changes under the peak amplitudes of the amplitude sound.

The still relatively raw result of this algorithm is the first version of my acousmatic study.

The subsequent revision step was primarily aimed at working out the differences between the individual iteration steps more precisely. For this purpose, a series of effects were used, which in turn behave differently depending on the peak amplitude of the amplitude sound. To make this possible, the series of effects was integrated directly into the peak loop.

In the third and final revision step, the audio was spatialized to 8 channels.

The individual channels sound into each other and change their position in a clockwise direction. This means that the basic character of the piece remains the same, but it is now also possible to follow the “working through” of the choosefreq loop spatially. To maintain this spatiality, the output was then converted to binaural stereo for the upload using Binauralix.

Spectral Select – Ambisonics

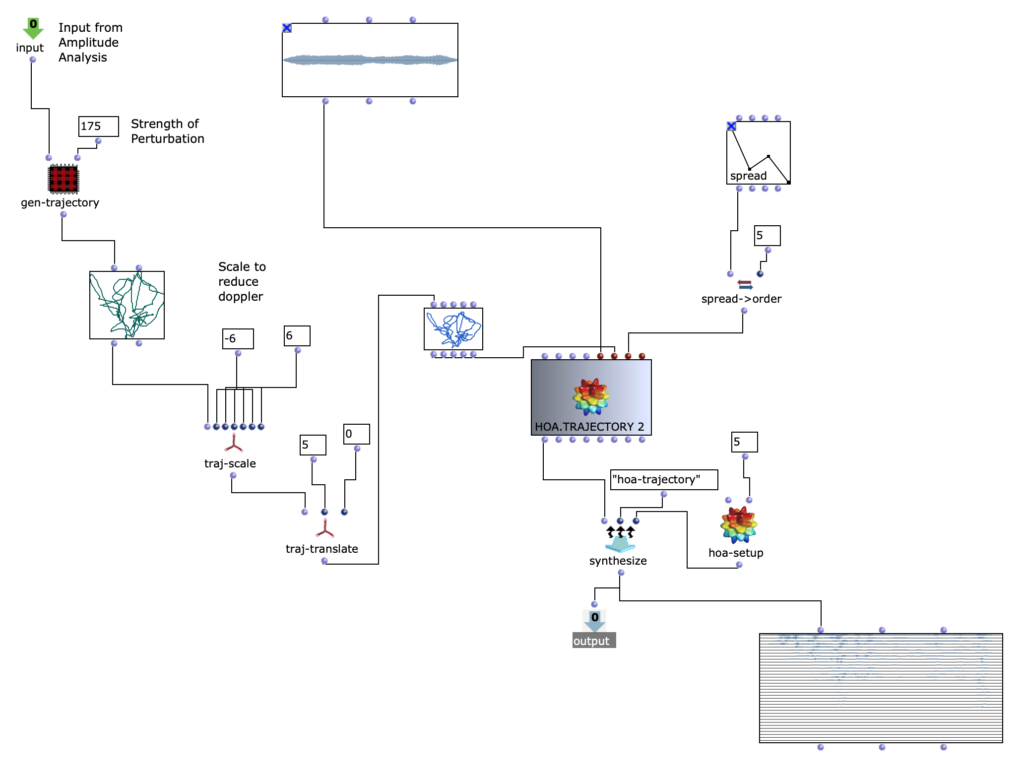

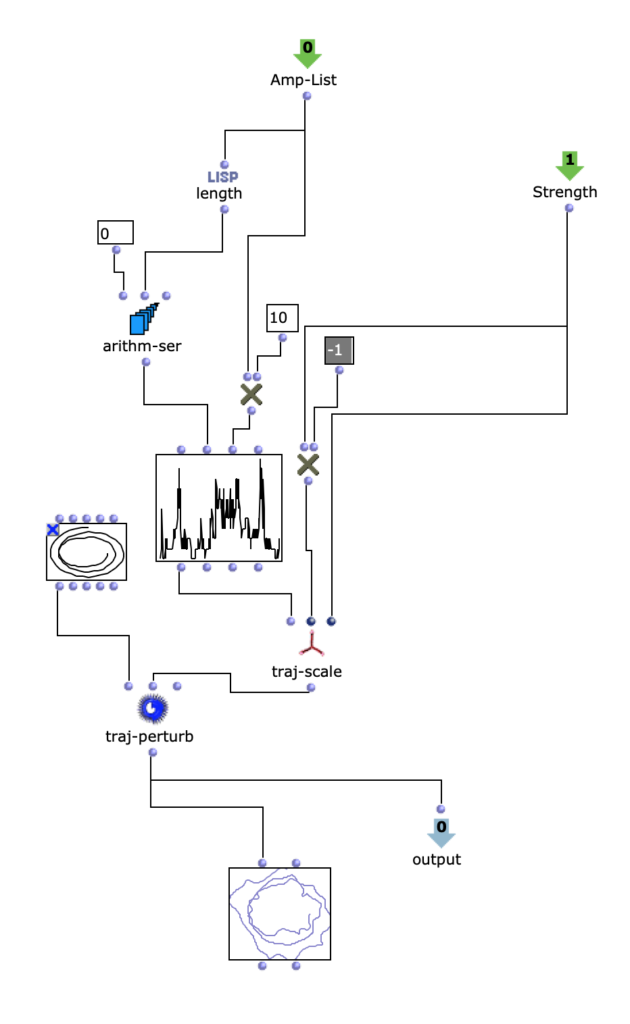

In the course of a further revision, Spectral Select was re-spatialized using the spatialization class “Hoa-Trajectory” from OM-Prisma and converted to the Ambisonics format.

To ensure that this step fits in well conceptually and sonically with the previous edits, the amplitude sound should also play an important role in the spatial position.

The possibilities for spatializing sounds with the help of Open Music and OM Prism are numerous. In the end, it was decided to work with Hoa-Trajectory. Here, the sound source is not bound to a fixed position in space and can be described with a trajectory that is scaled to the total duration of the audio input.

The trajectory is created depending on the amplitude analysis in the previous step.

A simple, three-dimensional circular movement, which spirals downwards, is perturbed with a more complex, two-dimensional curve. The Y-values of the more complex curve correspond to the analyzed amplitude values of the amplitude sound.

Depending on the scaling of the amplitude curve, this results in more or less pronounced deviations in the circular motion. Higher amplitude values therefore ensure more extensive movements in space.

It is interesting to note that OM-Prisma also takes Doppler effects into account. As a result, it is also audible that at higher amplitude values, more extreme distances to the listening position are covered in the same time. This step therefore has a direct influence on the timbre of the entire piece.

Depending on the scaling of the trajectory, fast movements can be strongly overemphasized, but artifacts can also occur (if the distance is too great).

To get a better impression, 2 different runs of the algorithm with different distances to the listener follow.

Versionwith closer distance and more moderate Dopp ler effects – Binaural Stereo

In contrast to the previous sound examples, the spectral sound and amplitude sound have been replaced in this example. This is a longer sound file for analyzing the amplitudes and a less distorted drone as a spectral sound.

The idea behind this project is to experiment with different sound files anyway.

Therefore, the old algorithm has been reworked to offer more flexibility with different sound files:

In addition, a randomized selection is now made from the spectral sound on the time axis. As a result, any shaping context should come from the magnitude of the amplitude sound and any timbre should be extracted from the spectral sound.