Marlon Schumacher will serve as music and installation co-chair, together with Esther Fee Feichtner, for the IEEE 5th International Symposium on the Internet of Sounds, held at the International Audio Laboratories Erlangen from 30 September to 2 October 2024. Follow this link to the official IEEE Website:

“The Internet of Sounds is an emerging research field at the intersection of the Sound and Music Computing and the Internet of Things domains. […] The aim is to bring together academics and industry to investigate and advance the development of Internet of Sounds technologies by using novel tools and processes. The event will consist of presentations, keynotes, panels, poster presentations, demonstrations, tutorials, music performances, and installations.”

The Internet of Sounds Research Network is supported by more than 120 institutions from over 20 countries, with a dedicated IEEE committee for emerging technology initiatives. Partners from Germany include:

- International Audio Laboratories Erlangen, a joint institution of the Friedrich-Alexander-Universität Erlangen-Nürnberg and Fraunhofer IIS. [Main contact person].
- Technical University Dresden, Institute of Communication Technology. [Main contact person].
- Leibniz University Hannover, Institute of Communication Technology. [Main contact person].
- Sennheiser. [Main contact person].
- Fraunhofer Institute for Digital Media Technology (IDMT), Industrial Media Application group. [Main contact person].
- Berlin University of the Arts, Sound Studies and Sonic Arts. [Main contact person].
- Hamburg University of Music and Drama, LIGETI Center.
- Hochschule Anhalt University of Applied Sciences. [Main contact person].
- Ostfalia University of Applied Sciences, IoT / IIoT Research Group. [Main contact person].
- Berlin University of Applied Sciences, School of Popular Arts. [Main contact person].
- Soundjack. [Main contact person].

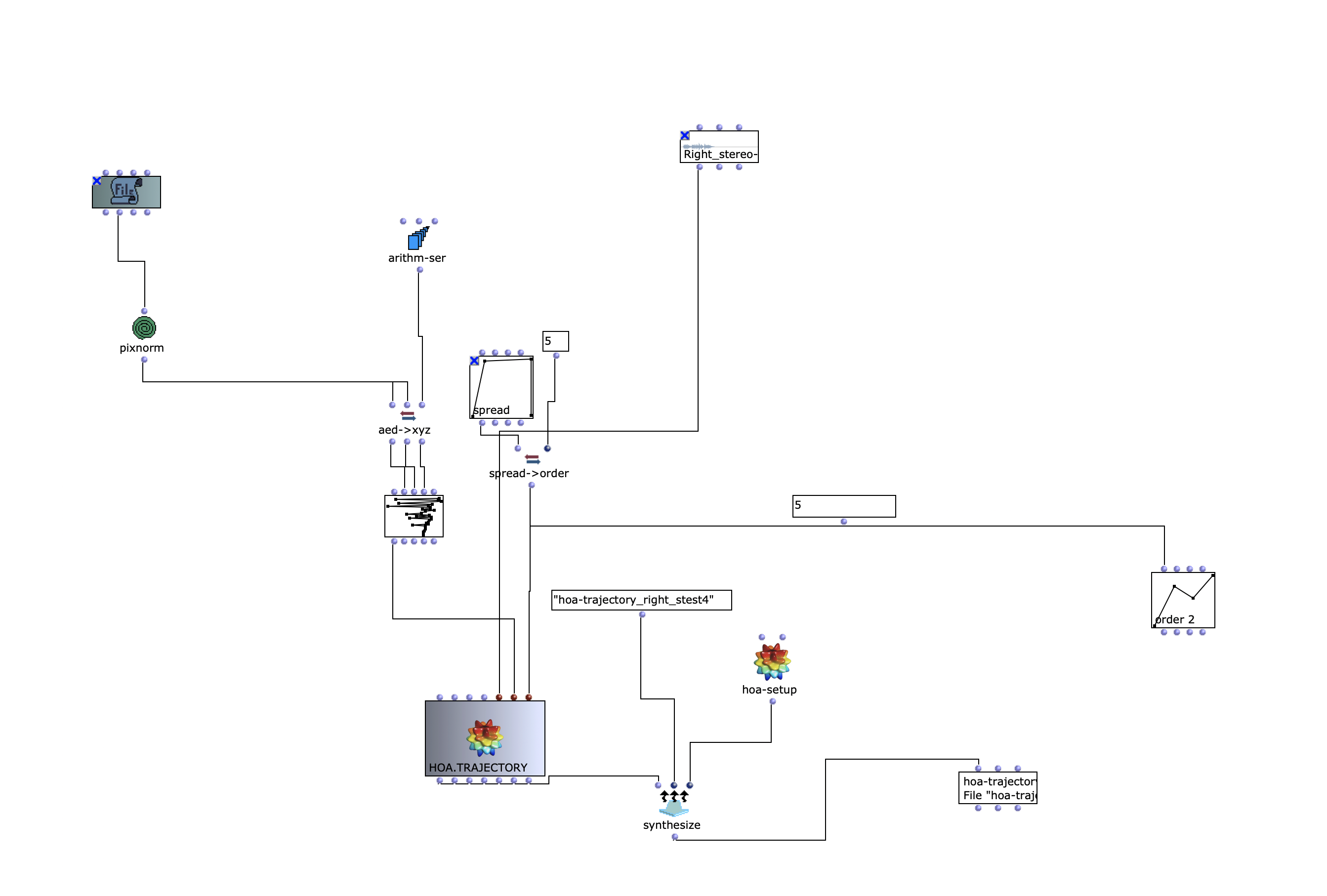

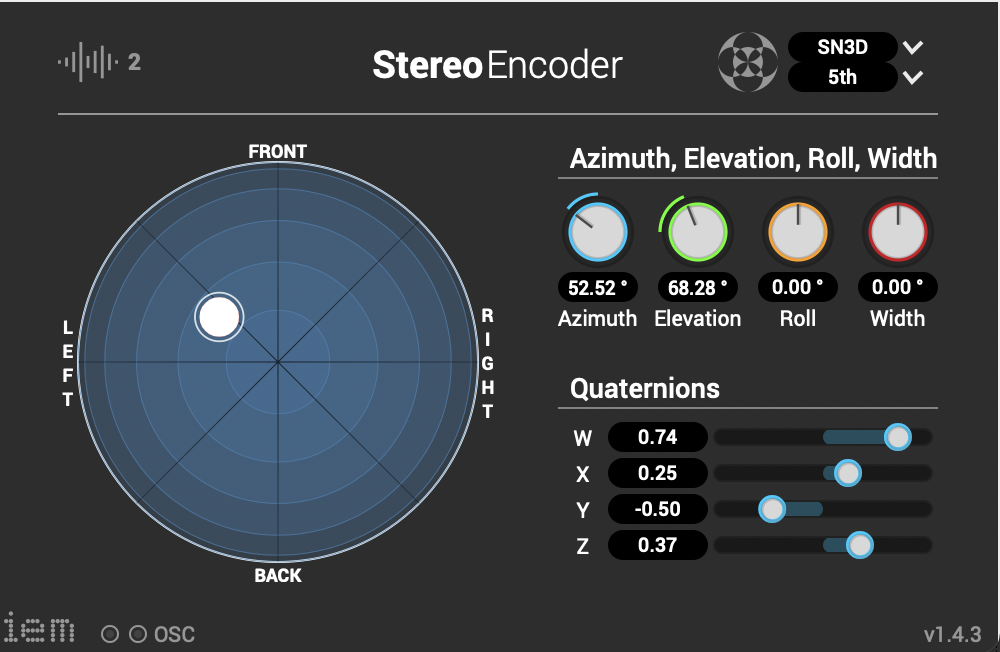

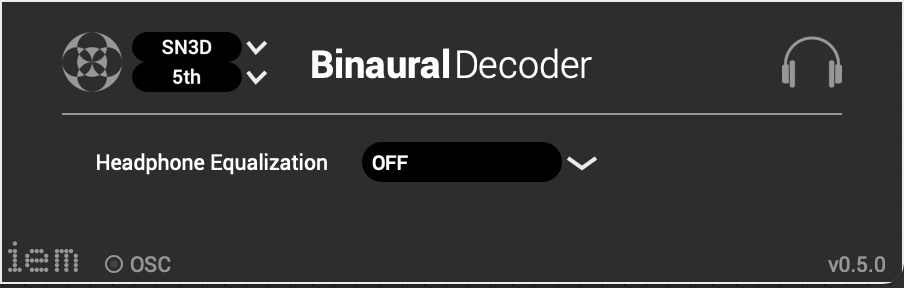

The stereo and mono files were first encoded in 5th order Ambisonics (36 channels) and then converted into two channels using the binaural encoder.
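The figure of 36 channels follows from the Ambisonics order: a full-sphere signal of order N carries (N + 1)² channels. The short Python sketch below only illustrates that arithmetic. The function names are hypothetical, and the ACN channel numbering is an assumption, since the post does not say which channel ordering was used.

```python
def ambisonic_channel_count(order: int) -> int:
    """Number of channels in a full-sphere Ambisonics signal of the given order."""
    if order < 0:
        raise ValueError("order must be non-negative")
    return (order + 1) ** 2


def acn_index(degree: int, index: int) -> int:
    """ACN channel number for spherical-harmonic degree n and index m (-n <= m <= n).

    ACN ordering is assumed here for illustration only.
    """
    if abs(index) > degree:
        raise ValueError("index must satisfy -degree <= index <= degree")
    return degree * degree + degree + index


print(ambisonic_channel_count(5))  # 36 channels for fifth order
print(acn_index(5, 5))             # 35, the last of the 36 channels (zero-based)
```

Each additional order adds 2N + 1 channels, so going from fourth to fifth order raises the count from 25 to 36 before the binaural stage reduces it to two.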

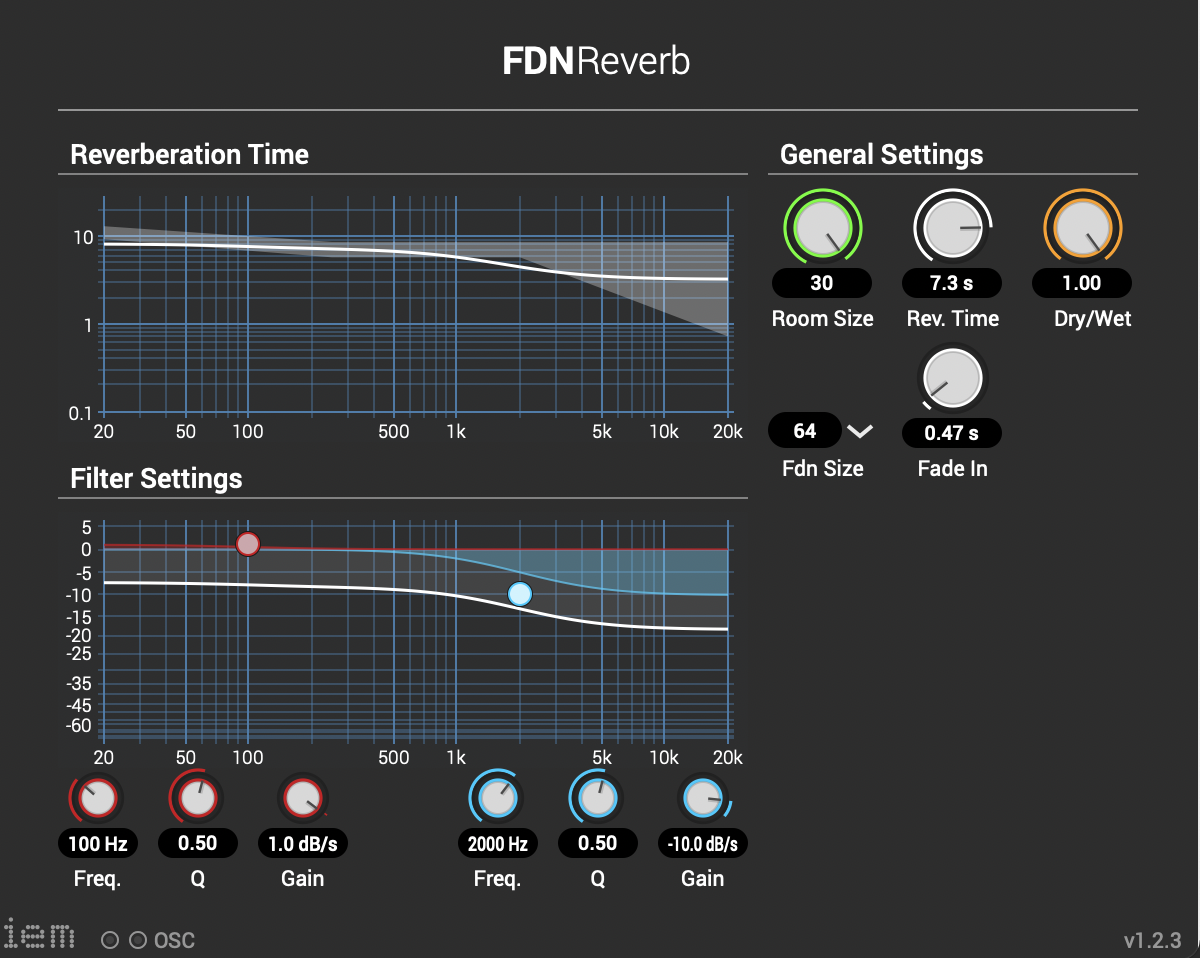

I programmed the other post-processing effects (Detune, Reverb) myself; they are available on GitHub. The reverb is written in C and based on James A. Moorer’s 1979 paper “About This Reverberation Business”. The detuner is also written in C, following the algorithm described in the HTML version of Miller Puckette’s book “The Theory and Technique of Electronic Music”. The result of the last iteration can be heard here.
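A central building block of Moorer's reverberator design is a comb filter with a one-pole lowpass in its feedback loop, so that high frequencies decay faster than low ones. The following is a minimal Python sketch of that idea, not the C code mentioned above. The function name, the parameter names, and the normalized form of the lowpass are assumptions made for illustration.

```python
def lowpass_comb(samples, delay, feedback, damping):
    """Feedback comb filter with a one-pole lowpass in the feedback path.

    samples  -- input signal as a sequence of floats
    delay    -- delay length in samples (positive integer)
    feedback -- feedback gain; keep |feedback| < 1 for a decaying tail
    damping  -- lowpass coefficient in [0, 1); 0 gives a plain comb filter
    """
    if delay <= 0:
        raise ValueError("delay must be a positive number of samples")
    buffer = [0.0] * delay   # circular delay line
    lowpass_state = 0.0      # memory of the one-pole lowpass
    position = 0
    output = []
    for sample in samples:
        delayed = buffer[position]
        # One-pole lowpass with unity gain at DC (assumed normalization).
        lowpass_state = (1.0 - damping) * delayed + damping * lowpass_state
        # Write the input plus the filtered, attenuated echo back into the line.
        buffer[position] = sample + feedback * lowpass_state
        position = (position + 1) % delay
        output.append(delayed)
    return output


# Feed a unit impulse through the filter to inspect its echoes.
impulse = [1.0] + [0.0] * 15
response = lowpass_comb(impulse, delay=4, feedback=0.5, damping=0.0)
print(response[4], response[8], response[12])  # 1.0 0.5 0.25
```

With `damping` set to 0 the echoes simply halve every four samples. Raising it smears and darkens each successive echo. A complete reverberator in this style would run several such filters in parallel with different delay lengths.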
