In this article, I describe the creative process behind my composition in order to present my experience with multi-channel processing. I produced the composition as part of the seminar “Visual Programming of Space/Sound Synthesis (VPRS)” with Prof. Dr. Marlon Schumacher at the HFM Karlsruhe.

Supervisor: Prof. Dr. Marlon Schumacher

A study by: Mila Grishkova

Summer semester 2022

University of Music, Karlsruhe

✅ The goal

The aim of my project work was to gain hands-on experience with the complete multi-channel editing workflow.

I use three sounds to build the composition.

Then I realize the piece in 3D Ambisonics 5th-order format (a 36-channel audio file). For the multi-channel processing I use OM-SoX, and for spatialization and rendering in Ambisonics I use OMPrisma, here with dynamic spatialization (hoa.continuous). I import the resulting audio files into a corresponding Reaper project (a template is provided, see Fig 7). Within Reaper, the Ambisonics audio tracks are processed with plug-ins.

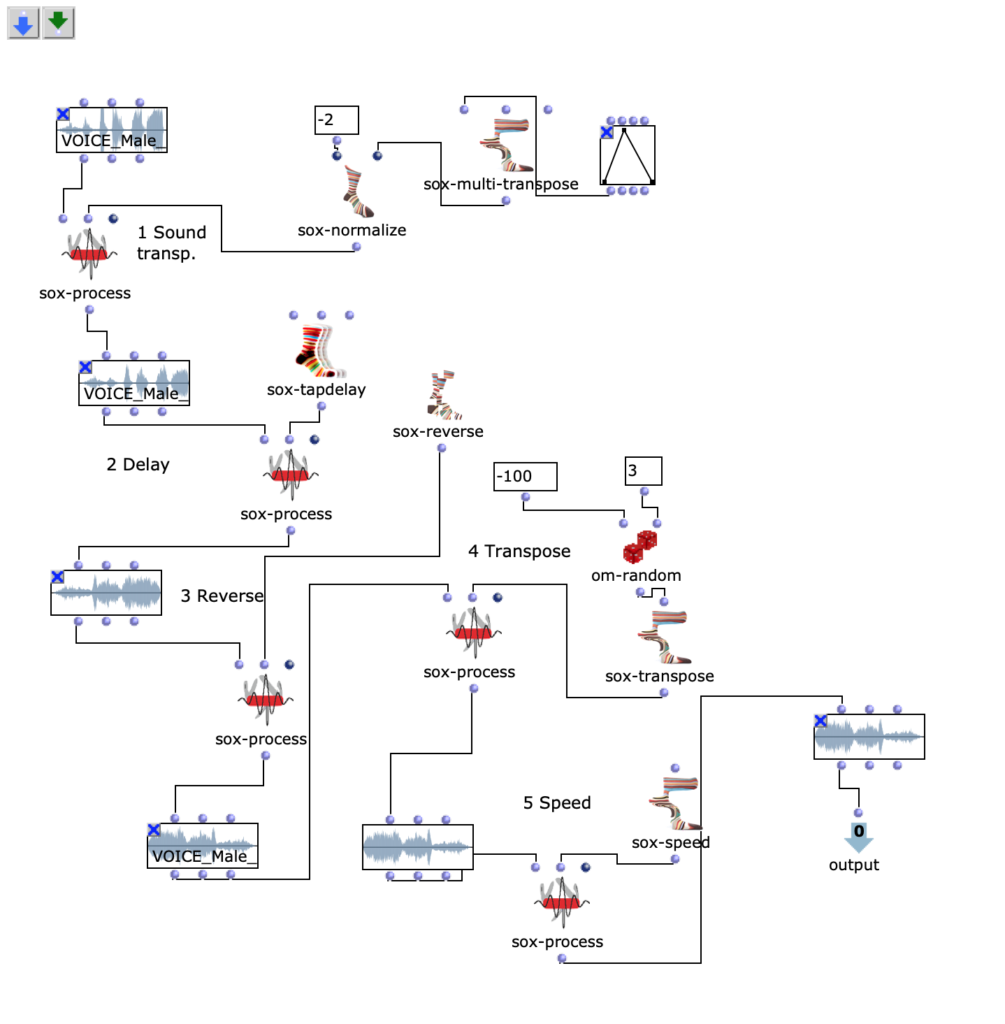

✅ 1st figure & 1st sound

In the 1st figure you can see an OpenMusic patch. The patch “Sound1” contains the editing chain for the first sound. I start with sound-voice, transpose it (OM sox-transpose, OM sox-normalize), and then apply delay lines (OM sox-tapdelay). The next step plays the sound backwards (OM sox-reverse). After that, the patch transposes the sound again, this time with the random method (OM sox-random). The last element of this patch is the speed processing (OM sox-speed).

Fg. 1 shows OpenMusic Patch Sound1: This patch shows transformation of the 1st sound

Audio I: the first sound
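The order of operations in this patch can be sketched outside OpenMusic. The following is a minimal plain-Python illustration, not the OM-SoX implementation: all function names are hypothetical, the random transposition is left out, and the speed change is a simple linear-interpolation resampler (which, like a tape speed change, also shifts the pitch). A short sine tone stands in for the recorded sound.

```python
import math

def normalize(samples, peak=1.0):
    """Scale samples so the largest absolute value equals `peak`."""
    largest = max((abs(s) for s in samples), default=0.0)
    if largest == 0.0:
        return list(samples)
    return [s * peak / largest for s in samples]

def tap_delay(samples, delay, gain):
    """Add one delayed, attenuated copy of the signal (a single tap)."""
    out = list(samples) + [0.0] * delay
    for i, s in enumerate(samples):
        out[i + delay] += gain * s
    return out

def reverse(samples):
    """Play the material backwards."""
    return list(reversed(samples))

def change_speed(samples, factor):
    """Resample by linear interpolation; factor > 1 plays faster and higher."""
    if factor <= 0:
        raise ValueError("factor must be positive")
    if not samples:
        return []
    n_out = max(1, int(len(samples) / factor))
    out = []
    for i in range(n_out):
        pos = i * factor
        lo = int(pos)
        hi = min(lo + 1, len(samples) - 1)
        frac = pos - lo
        out.append((1 - frac) * samples[lo] + frac * samples[hi])
    return out

# Hypothetical input: 0.1 s of a 440 Hz sine at 8 kHz.
SAMPLE_RATE = 8000
tone = [math.sin(2 * math.pi * 440 * n / SAMPLE_RATE) for n in range(800)]

# Chain in the order described: normalize, delay, reverse, speed.
processed = change_speed(reverse(tap_delay(normalize(tone, 0.8), 400, 0.5)), 1.5)
```

Each step returns a new list, so the steps can be reordered or removed to hear how much the order matters.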

✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴

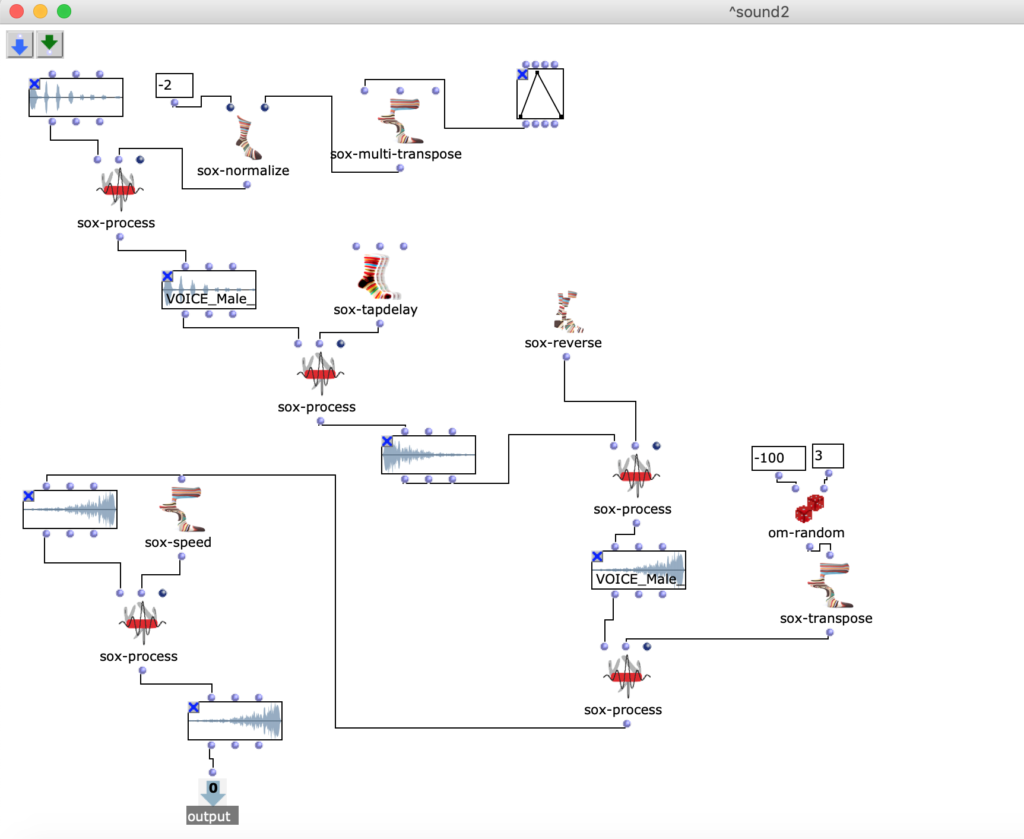

✅ 2nd figure & 2nd sound

In the 2nd figure you can see an OpenMusic patch. The patch “Sound2” contains the method “Sound2” for editing. I edit the 2nd sound with the same manipulations as the 1st sound; see the description above.

Fg. 2 shows OpenMusic Patch Sound2: This patch shows transformation of the 2nd sound

Audio II: the second sound

✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴

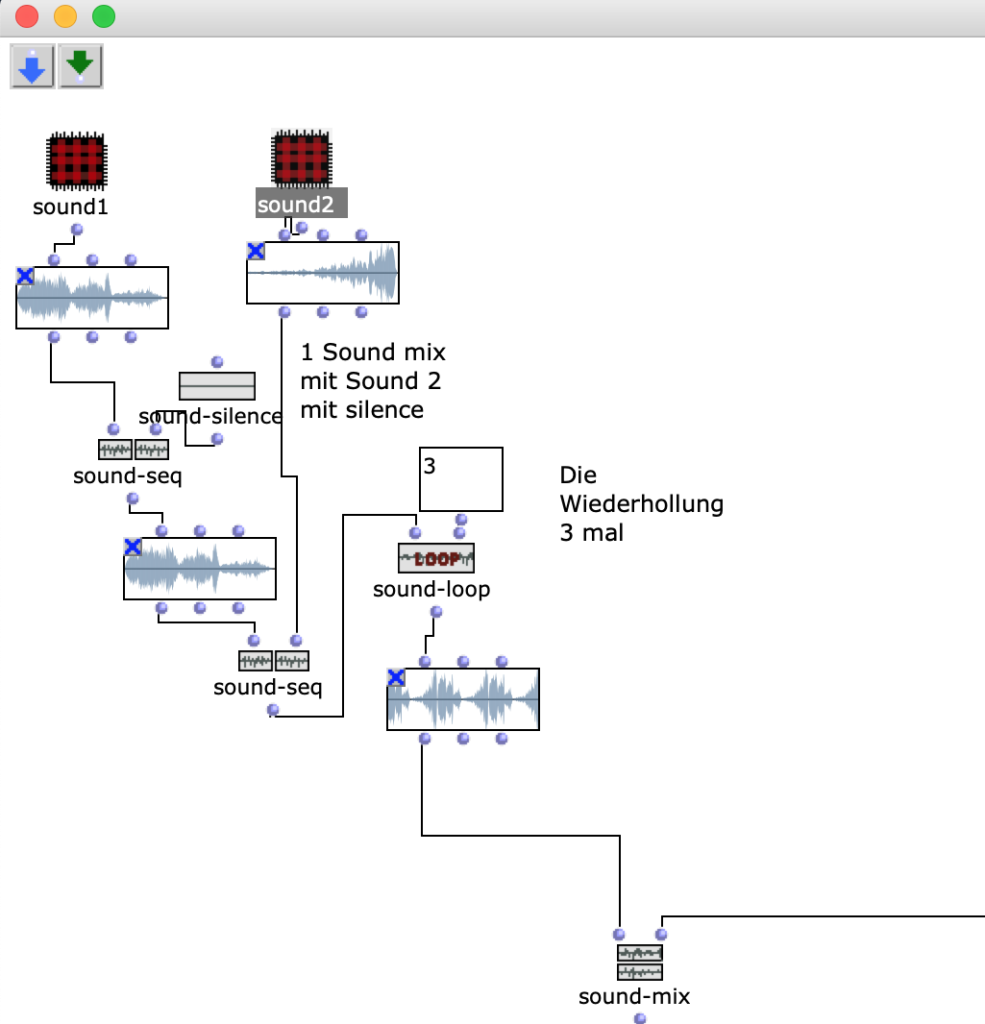

✅ 3rd figure & 3rd sound

In the 3rd figure you can see the OpenMusic patch “Mix”. This patch mixes the two sounds (OM sound-mix) that I described in the previous two patches. I add pauses (OM sound-silence) and then repeat the sound material three times (OM sound-loop).

Fg. 3 shows OpenMusic patch “Mix”: This patch shows sound-mix (sound 1 and sound 2).

Audio III: the third sound
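The mix, pause, and loop steps can be sketched in plain Python as follows. This is only an illustration of the idea, not the OpenMusic functions themselves: the function names are hypothetical, and placing the pause after the mix and before the repetition is an assumption about the patch.

```python
def mix(a, b):
    """Sum two signals sample by sample, padding the shorter one with zeros."""
    n = max(len(a), len(b))
    a = list(a) + [0.0] * (n - len(a))
    b = list(b) + [0.0] * (n - len(b))
    return [x + y for x, y in zip(a, b)]

def silence(seconds, sample_rate):
    """Return a pause of the given length as a list of zero samples."""
    return [0.0] * int(round(seconds * sample_rate))

def loop(samples, times):
    """Repeat the material `times` times back to back."""
    return list(samples) * times

def mix_pause_loop(a, b, pause_seconds, sample_rate, repeats=3):
    """Mix two sounds, append a pause, then repeat the result."""
    return loop(mix(a, b) + silence(pause_seconds, sample_rate), repeats)
```

For example, `mix_pause_loop(sound1, sound2, 0.5, 44100)` would yield the mixed material followed by half a second of silence, three times in a row.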

✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴

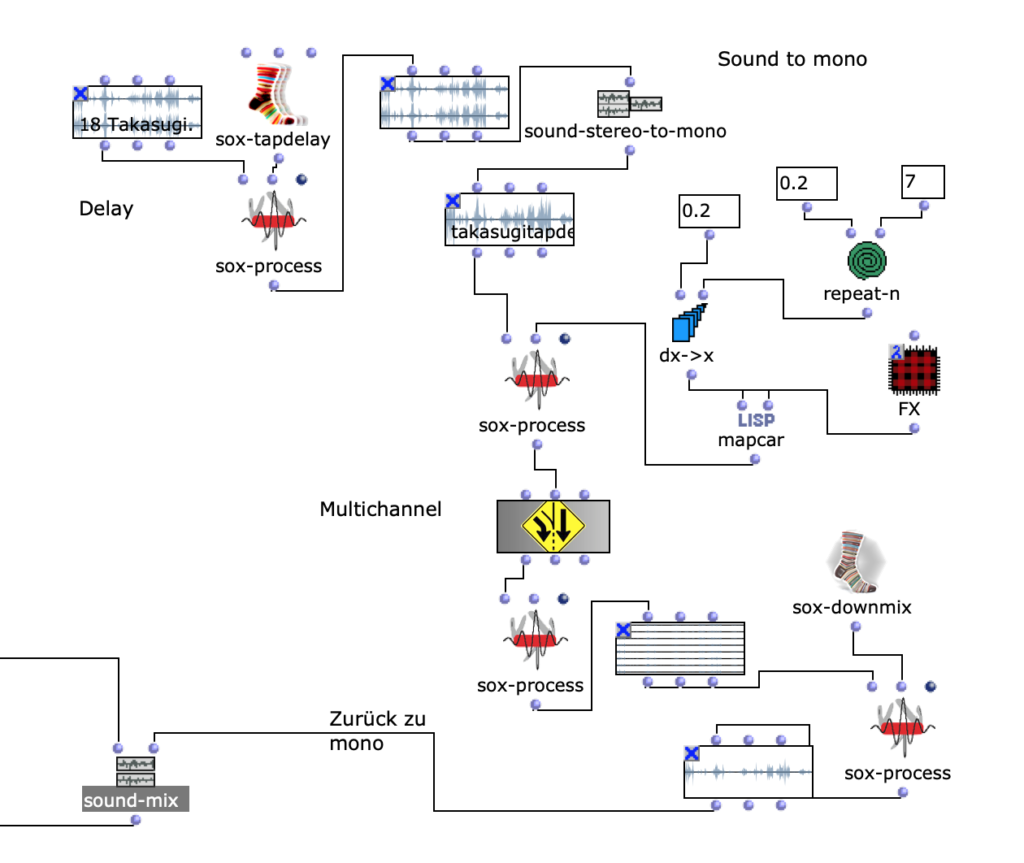

✅ 4th figure & 4th sound

The 4th figure contains patch 4, in which you can see the arrangement of Takasugi’s composition “Diary of a Lung”.

The composer Steven Kazuo Takasugi presented his piece “Diary of a Lung, version for eighteen musicians and electronic playback” at the University of Music Karlsruhe. He was looking for two sound engineers who could play his composition live over several channels at the concert.

That’s why I took Takasugi’s music piece as part of my material.

Fg. 4 shows OpenMusic patch “Takasugi”: This patch shows the editing of material from the audio of Takasugi’s “Diary of a Lung”

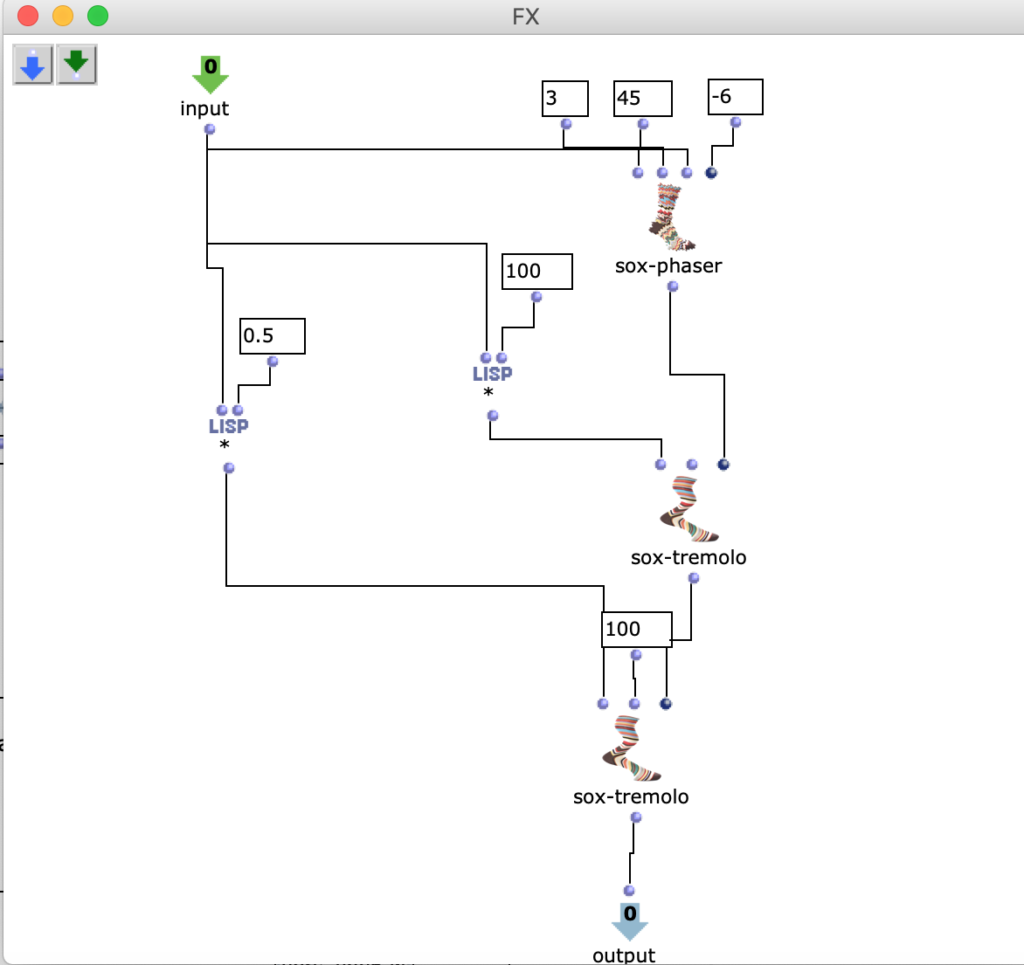

In the next figure (Fig. 5) you can see the patch “FX”.

Fg. 5 shows OpenMusic patch “FX”: This patch shows OM sox-phaser, OM sox-tremolo

Audio IV: the fourth sound
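Of the two effects in the “FX” patch, the tremolo is the simpler one to illustrate: it is a slow, periodic change of volume. The sketch below is a generic plain-Python version under that definition, not the OM-SoX code; the function name and the parameters `rate_hz` and `depth` are hypothetical. The phaser is not covered here.

```python
import math

def tremolo(samples, sample_rate, rate_hz=5.0, depth=0.5):
    """Modulate the volume with a sine-shaped low-frequency oscillator.

    depth = 0 leaves the signal unchanged; depth = 1 lets the
    volume drop to silence at the bottom of each cycle.
    """
    out = []
    for n, s in enumerate(samples):
        # Oscillator scaled to the range 0..1.
        lfo = 0.5 * (1.0 + math.sin(2 * math.pi * rate_hz * n / sample_rate))
        # Gain swings between (1 - depth) and 1.
        gain = 1.0 - depth * lfo
        out.append(s * gain)
    return out
```

With `rate_hz=5.0` the volume rises and falls five times per second, which is heard as a pulsing rather than as a change of pitch.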

✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴

✅ 1st result

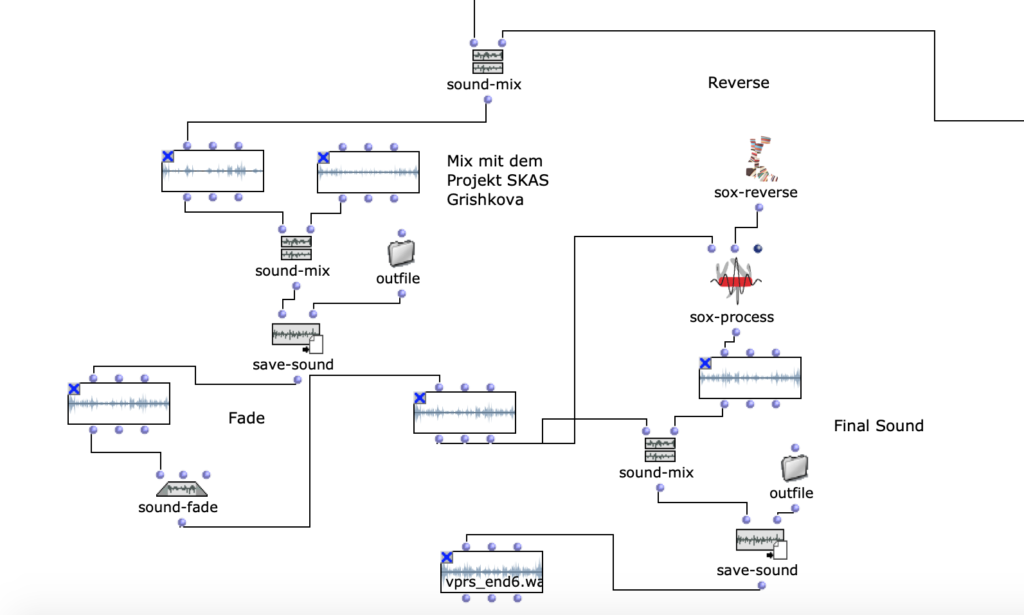

In the 6th figure you can see the final editing. I mix the audio material (Mix 2 Sound Takasugi) with my project, which I realized in the context of SKAS. The description of the project can be found here.

Fg. 6 shows OpenMusic Patch “FinalMix”: This patch shows the final transformation with the mono track

The result of the last iteration can be heard here:

Audio V: the fifth sound

✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴

✅ 2nd result (OMPrisma)

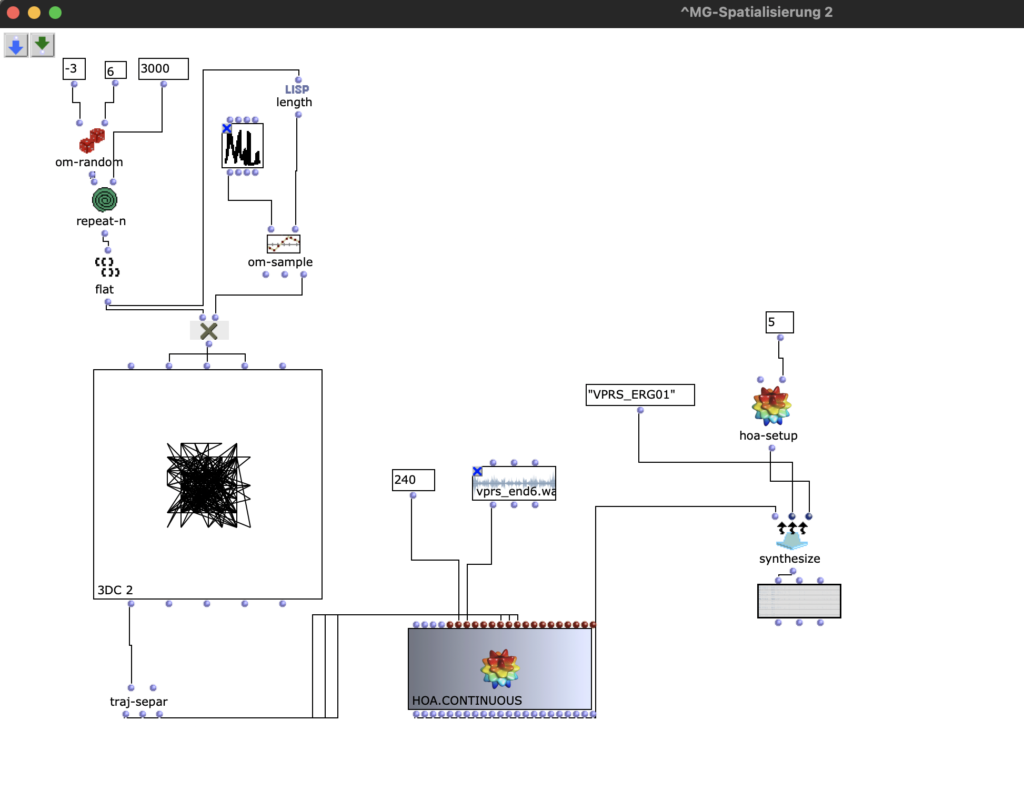

Then I edited the resulting audio files with OMPrisma (see Fig 7).

Mr. Takasugi’s main idea is that a composer (and musician) must produce very personal music. You have to bring “yourself” into the composition.

In practice, bringing “yourself” into the composition means bringing in some random elements. Random elements are necessary: as humans we make mistakes, overlook things, or decide spontaneously. I use OM-random to integrate these random elements into the composition.
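One simple way to picture such a random element is a small, bounded deviation added to each value of a parameter. The sketch below uses Python's standard `random` module rather than OM-random; the function name `jitter` and its parameters are hypothetical and only illustrate the principle.

```python
import random

def jitter(values, amount, seed=None):
    """Shift every value by a random offset between -amount and +amount.

    Passing a seed makes the "mistakes" reproducible, so the same
    version of the piece can be rendered again.
    """
    rng = random.Random(seed)
    return [v + rng.uniform(-amount, amount) for v in values]
```

Without a seed every run gives a slightly different result, which is closer to the spontaneous decisions described above.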

Fg. 7 shows OpenMusic Patch “OMPrisma”: This patch shows the transformation with HOA.Continuous

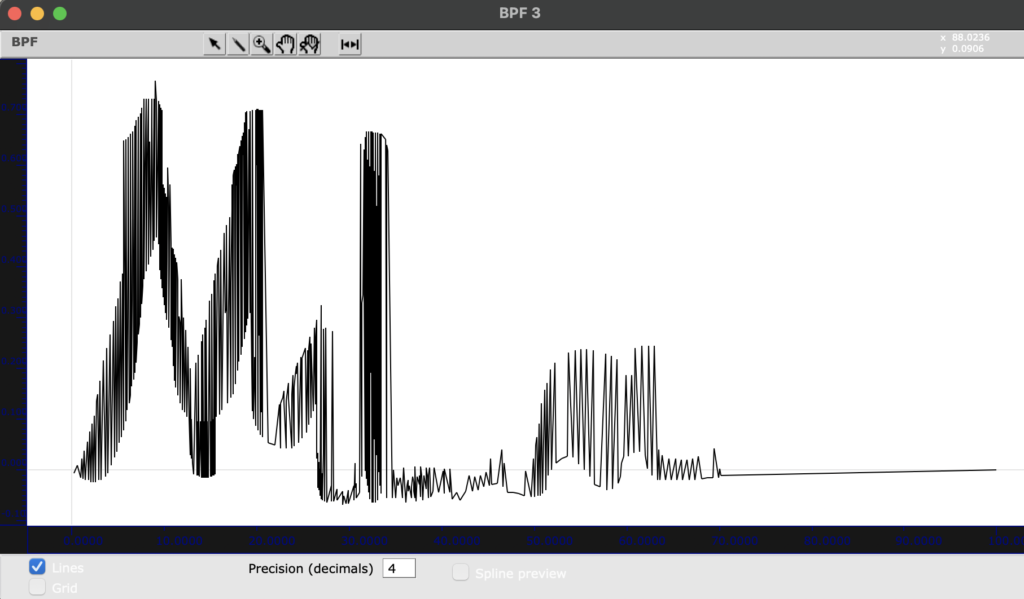

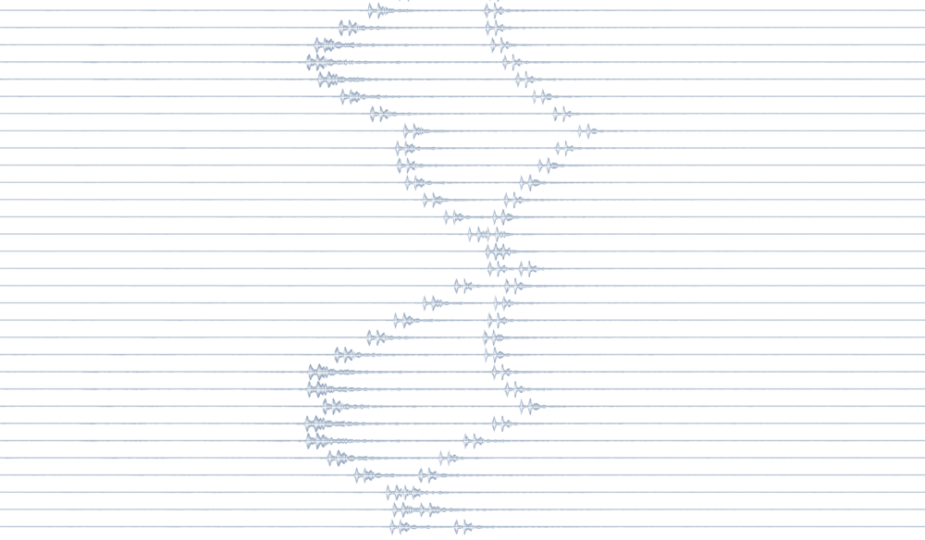

To personalize the composition, I use my name (Mila) as a pattern for the movement (see Fig 8).

Fg. 8 shows OpenMusic object “BPF”: This object shows my name as a pattern for the transformations

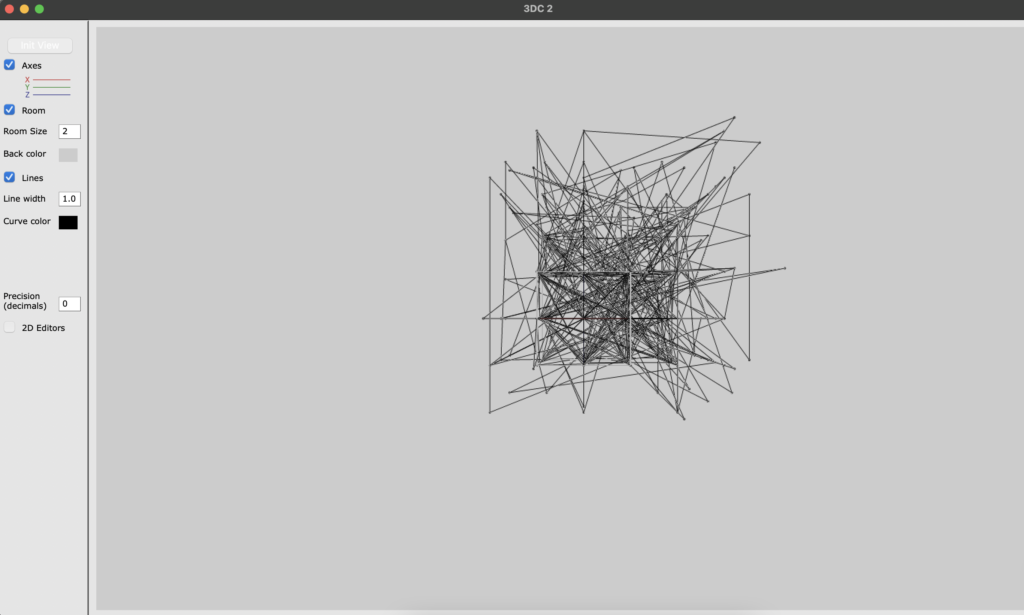

Then I use the 3D OpenMusic object, where the trajectory can also be seen clearly in 3D (see Fig. 9).

Fg. 9 shows OpenMusic object “3DC”: This object shows the transformations

With the traj-separ object you can send the x-, y-, and z-coordinates to HOA.Continuous. With HOA.Continuous you can also set the length of the composition (in seconds). In my case it is 240, which means 4 minutes.
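The idea of a trajectory that is split into coordinates and stretched over the length of the piece can be sketched in plain Python. This is an illustration only, not how traj-separ or HOA.Continuous work internally: the function names are hypothetical, the five control points are an invented zigzag rather than the curve from the patch, and the points are assumed to be spread evenly over the duration.

```python
def split_coordinates(points):
    """Separate a list of (x, y, z) points into three coordinate lists."""
    xs, ys, zs = zip(*points)
    return list(xs), list(ys), list(zs)

def position_at(points, duration, t):
    """Linearly interpolate the source position at time t (in seconds)."""
    if len(points) < 2:
        raise ValueError("need at least two points")
    t = min(max(t, 0.0), duration)
    seg = t / duration * (len(points) - 1)
    i = min(int(seg), len(points) - 2)
    frac = seg - i
    return tuple(a + frac * (b - a) for a, b in zip(points[i], points[i + 1]))

# Hypothetical zigzag path, travelled over 240 seconds (4 minutes).
path = [(-1.0, -1.0, 0.0), (-1.0, 1.0, 0.0), (0.0, 0.0, 0.5),
        (1.0, 1.0, 0.0), (1.0, -1.0, 0.0)]
DURATION = 240.0
halfway = position_at(path, DURATION, 120.0)
```

In this sketch, changing `DURATION` makes the same path play out faster or slower without touching the points themselves.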

Then with hoa-setup you can select which order of HOA you need. In my case, hoa-setup is 5, which means that I use 5th-order Higher Order Ambisonics.

You can distinguish between First Order Ambisonics (FOA) and Higher Order Ambisonics (HOA). First Order Ambisonics is a recording format for 3D sound that consists of four channels and can also be exported to various 2D formats (e.g. stereo, surround sound).

With higher-order Ambisonics you can localize sound sources even more precisely, because the angle can be determined with finer resolution. The higher the order, the more spherical harmonic components are added.
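The four first-order channels can be made concrete with the classic B-format encoding equations. The sketch below assumes the traditional convention (channels W, X, Y, Z, with W attenuated by 1/√2, azimuth measured counterclockwise from the front, elevation measured upwards); other conventions order and scale the channels differently. The function name is hypothetical, and this is not code taken from OMPrisma.

```python
import math

def encode_foa(sample, azimuth_deg, elevation_deg):
    """Encode one mono sample into the four first-order channels (W, X, Y, Z)."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2.0)              # omnidirectional component
    x = sample * math.cos(az) * math.cos(el)  # front-back
    y = sample * math.sin(az) * math.cos(el)  # left-right
    z = sample * math.sin(el)                 # up-down
    return (w, x, y, z)
```

A source straight ahead (azimuth 0, elevation 0) ends up only in W and X, while a source directly to the left (azimuth 90) ends up only in W and Y.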

“The increasing number of components gives us higher resolution in the domain of sound directivity. The amount of B-format components in High order Ambisonics (HOA) is connected with formula for 3D Ambisonics: $(n+1)^2$ or for 2D Ambisonics $2n+1$ where $n$ is given Ambisonics order”.(C)
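The relationship between order and channel count, (n + 1)² for 3D and 2n + 1 for 2D, can be checked with a few lines of Python. The function names are hypothetical; the arithmetic confirms that order 5 gives the 36-channel file used in this project and that order 1 gives the four channels of FOA.

```python
def hoa_channels_3d(order):
    """Number of components for 3D Ambisonics of the given order."""
    return (order + 1) ** 2

def hoa_channels_2d(order):
    """Number of components for 2D (horizontal-only) Ambisonics."""
    return 2 * order + 1

for n in (1, 3, 5):
    print(n, hoa_channels_3d(n), hoa_channels_2d(n))
```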

✅ The result of the OpenMusic project with OMPrisma can be downloaded here as audio.

✅ The result of the OpenMusic project with OMPrisma can be downloaded here as code.

✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴✴

✅ Reaper

Then I imported the resulting audio files into a Reaper project, edited the Ambisonics audio tracks with plugins, and made further manipulations.

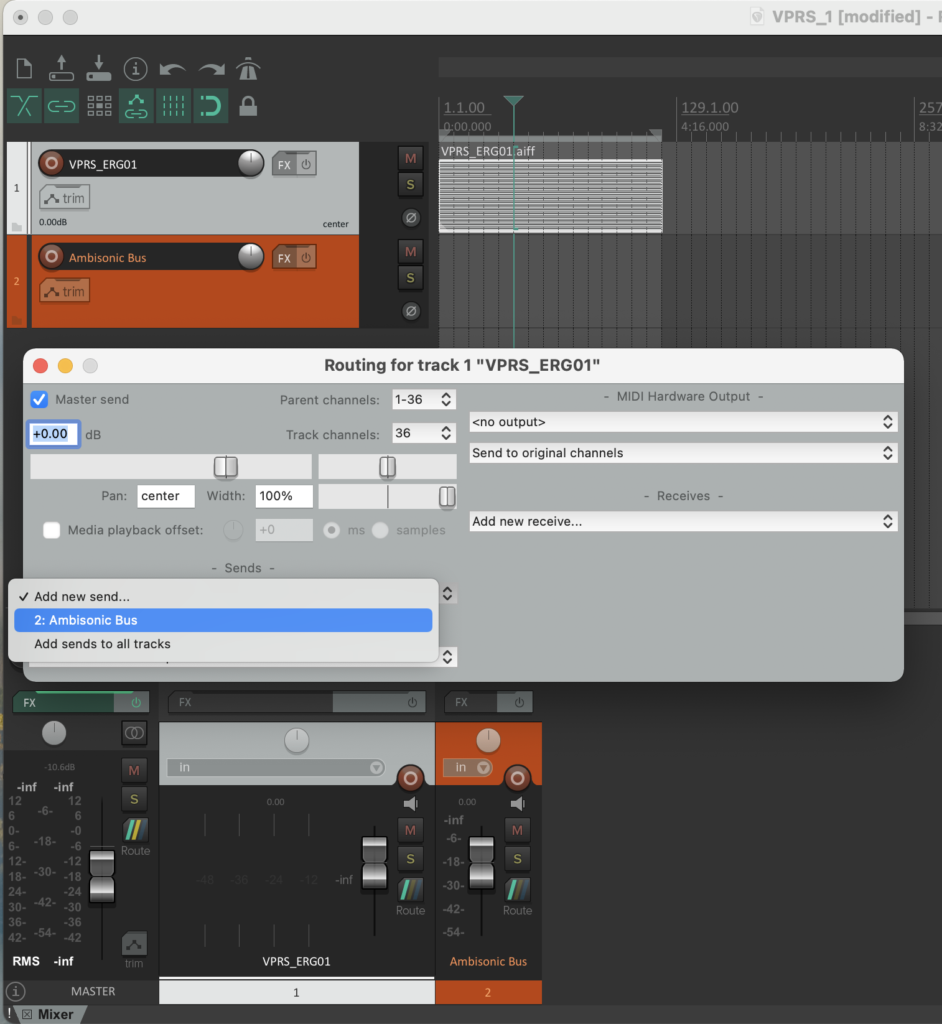

After the audio material is added to Reaper, you have to set up the routing. The main idea is that the 36 channels are selected and sent to the Ambisonic Bus (a new track, see Fig 10).

Fg. 10 shows Reaper project: step routing (36 channels), sends to Ambisonic bus

✅ Mixing & Plugins

I encoded the prepared material into B-format using automated dynamic spatialization. I also applied dynamics correction (limiter).

With plugins you can change and improve the sound as you wish. In audio technology, plugins are extensions for DAWs, including those that simulate various instruments or add reverb and echo. In Reaper I used the following:

✅ IEM Plug-in Suite.

✅ ambiX v0.2.10 – Ambisonic plug-in suite.

✅ ATK_for_Reaper_Mac_1.0.b11.

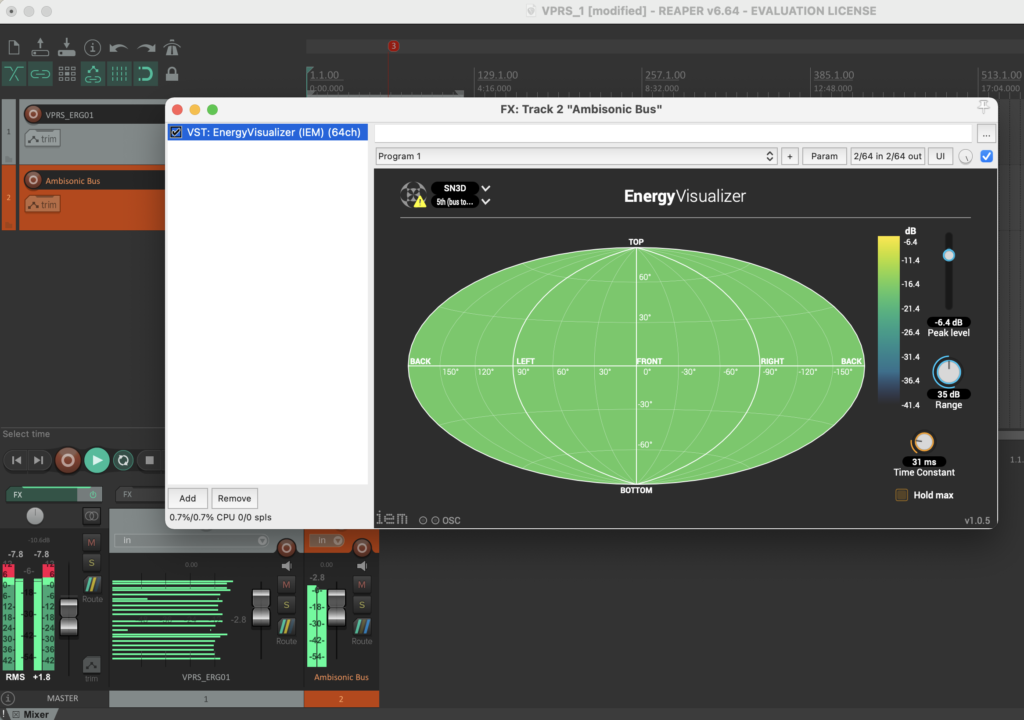

In the Ambisonic Bus track I added (see Fig 11):

✅ VST: EnergyVisualizer (IEM) (64ch).

Fg. 11 shows Reaper project: Ambisonic Bus VST Plugin (VST: EnergyVisualizer (IEM) (64ch))

Then I edited the sound with the EQ plug-in (see Fig 12). I added:

✅ VST: Multi EQ (IEM) (64ch).

Fg. 12 shows Reaper project: track “VPRS project” and VST plugin (VST: Multi EQ (IEM) (64ch))

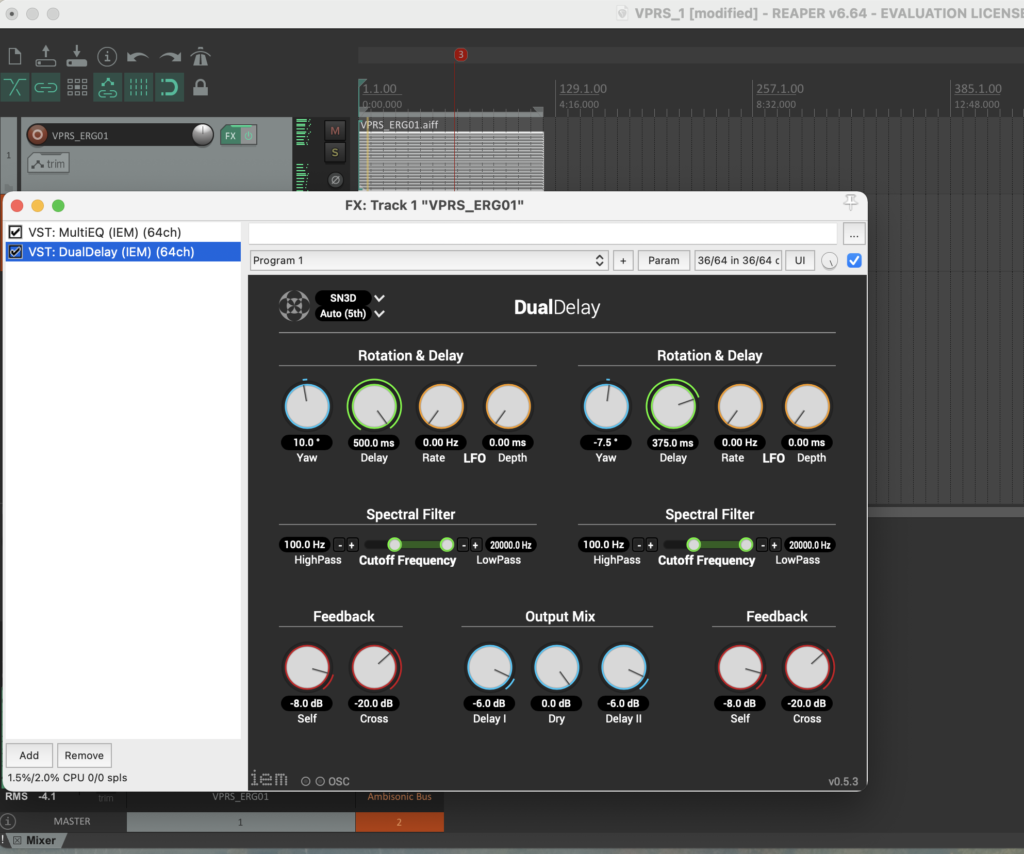

Then I added the DualDelay delay plug-in (see Fig 13).

✅ VST: DualDelay (IEM) (64ch).

Fg. 13 shows Reaper project: track “VPRS project” and VST plugin (VST: DualDelay (IEM) (64ch))

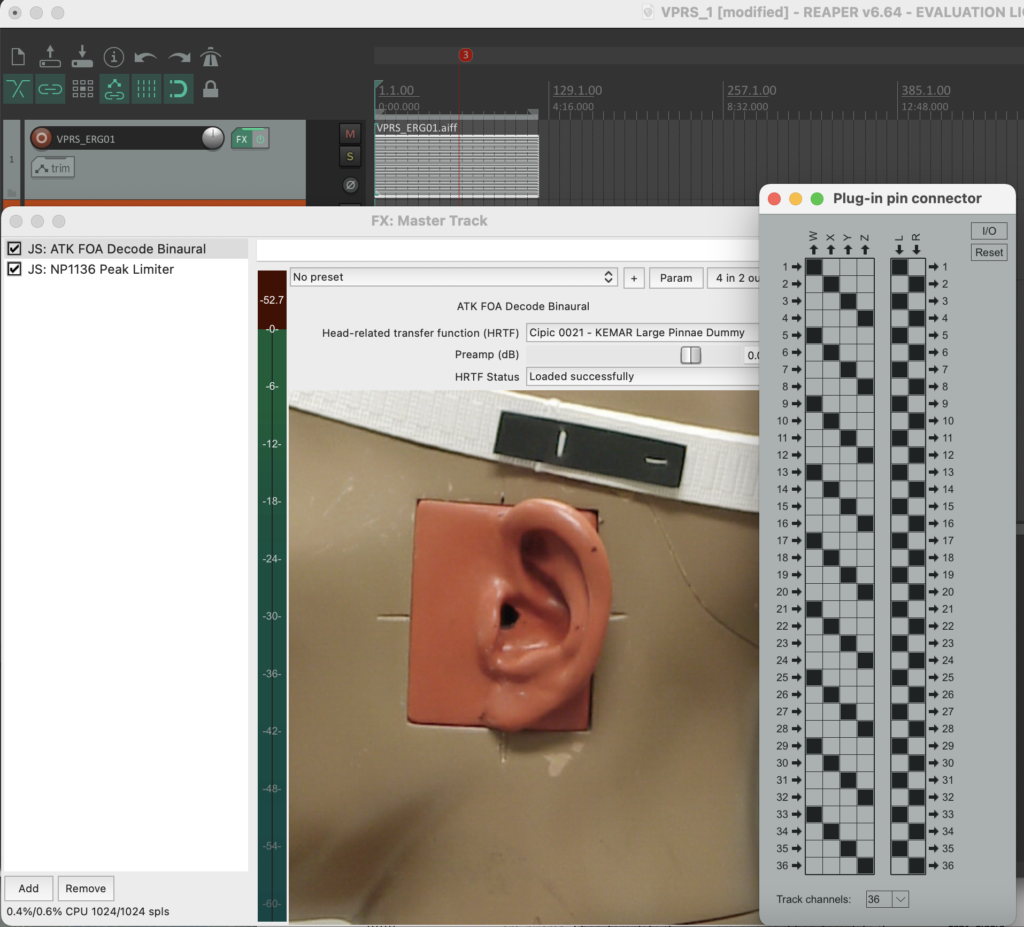

Then I added JS: ATK FOA Decode Binaural to the master track (see Fig 14).

✅ JS: ATK FOA Decode Binaural.

Fg. 14 shows Reaper project: Master track with JS: ATK FOA Decode Binaural.

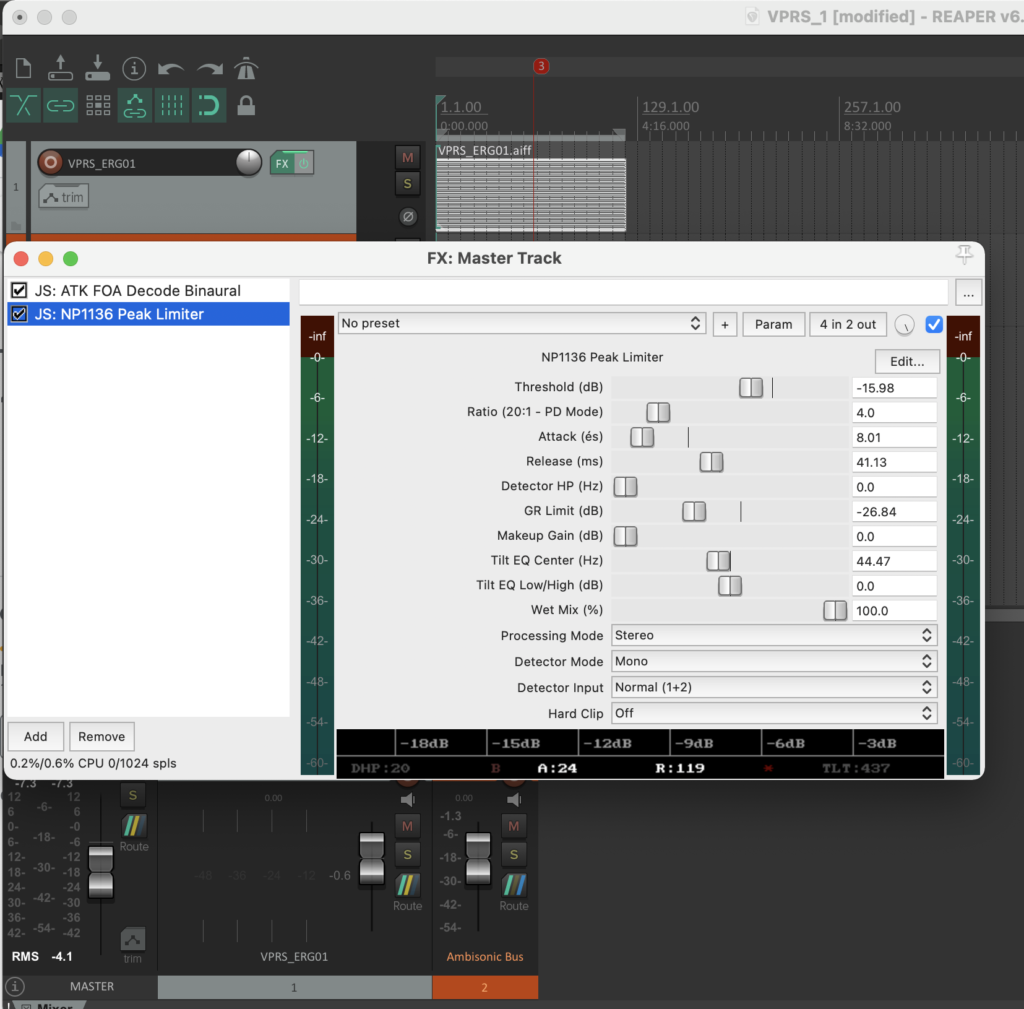

Then I added JS: NP1136 Peak Limiter to the master track (see Fig 15).

✅ JS: NP1136 Peak Limiter.

Fg. 15 shows Reaper project: Master track with JS: NP1136 Peak Limiter
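What a peak limiter does in principle can be shown with a short plain-Python sketch. This is a generic textbook-style limiter with instant attack and slow release, written under my own assumptions; it is not the algorithm of the NP1136 plug-in, and the function name and parameters are hypothetical.

```python
def peak_limit(samples, ceiling=0.9, release=0.999):
    """Keep every output sample at or below `ceiling` in absolute value.

    The gain drops immediately when a peak arrives and then
    recovers slowly, so the volume does not jump back abruptly.
    """
    gain = 1.0
    out = []
    for s in samples:
        # Gain that would bring this sample exactly to the ceiling.
        needed = ceiling / abs(s) if abs(s) > ceiling else 1.0
        if needed < gain:
            gain = needed                               # instant attack
        else:
            gain += (1.0 - release) * (needed - gain)   # slow release
        out.append(s * gain)
    return out
```

Quiet passages pass through unchanged, because the gain stays at 1.0 until a sample exceeds the ceiling.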

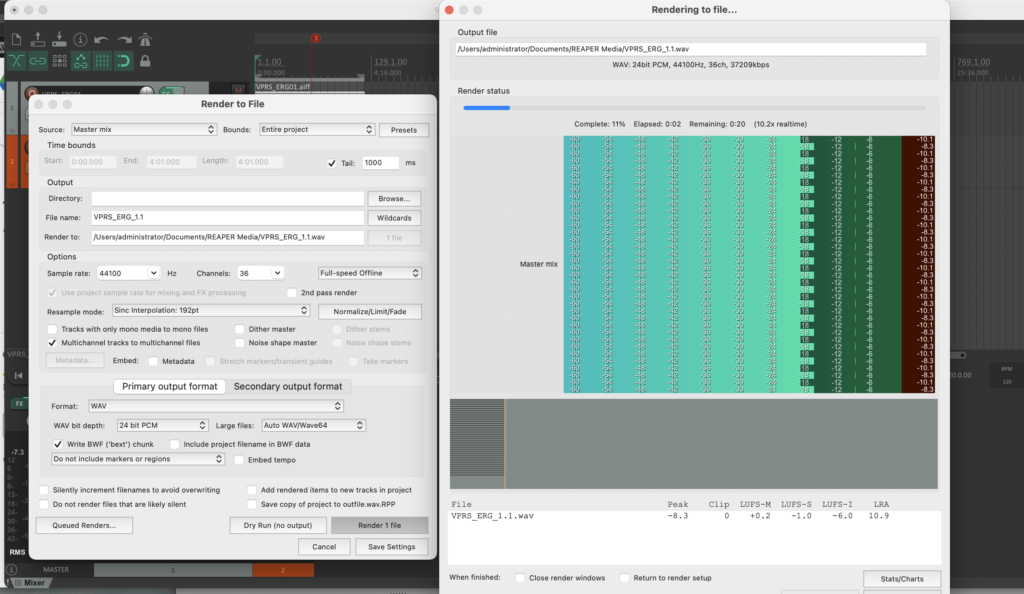

Then I rendered the project (see Fig 16).

✅ Render to File

Fg. 16 shows Reaper project: Render to File

✅ The result of the Reaper project (Audio, Reaper Project, OM Code) can be downloaded here.

About the author