This article is about the three iterations of an acousmatic study by Zeno Lösch, which were carried out as part of the seminar “Symbolic Sound Processing and Analysis/Synthesis” with Prof. Dr. Marlon Schumacher at the HfM Karlsruhe. It deals with the basic conception, ideas, constructive iterations and the technical implementation with OpenMusic.

Responsible person: Zeno Lösch, master's student in Music Informatics at HfM Karlsruhe, 1st semester

Idea and concept

I got my inspiration for this study from the Freeze effect of GRM Tools.

This effect makes it possible to layer a sample and play it back at different speeds at the same time.

With this process you can create independent compositions, sound objects, sound structures and so on.
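The layering idea described above can be illustrated with a minimal Python sketch. This is not the GRM Tools algorithm and not the OpenMusic patch; it only assumes that "different speeds" means resampling the same sample list by different ratios and summing the results. The function names `resample` and `layer_speeds` are hypothetical.

```python
def resample(samples, speed):
    """Play back `samples` at `speed` (2.0 = twice as fast), linear interpolation."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    out = []
    pos = 0.0
    last = len(samples) - 1
    while pos <= last:
        i = int(pos)
        frac = pos - i
        nxt = samples[min(i + 1, last)]
        # Interpolate between the two neighbouring input samples.
        out.append(samples[i] * (1.0 - frac) + nxt * frac)
        pos += speed
    return out


def layer_speeds(samples, speeds):
    """Mix one copy of `samples` per speed, all starting at the same time."""
    layers = [resample(samples, s) for s in speeds]
    length = max((len(layer) for layer in layers), default=0)
    mix = [0.0] * length
    for layer in layers:
        for n, value in enumerate(layer):
            mix[n] += value
    return mix
```

A call such as `layer_speeds(sound, [0.5, 1.0, 2.0])` would stack a slower, an unchanged, and a faster copy of the same material. The sum is not normalized here, so it can exceed the range of the original.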

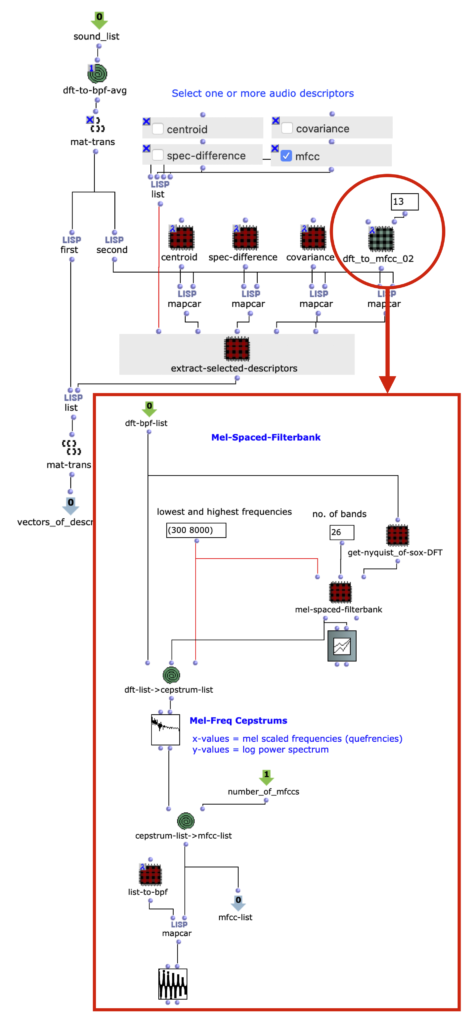

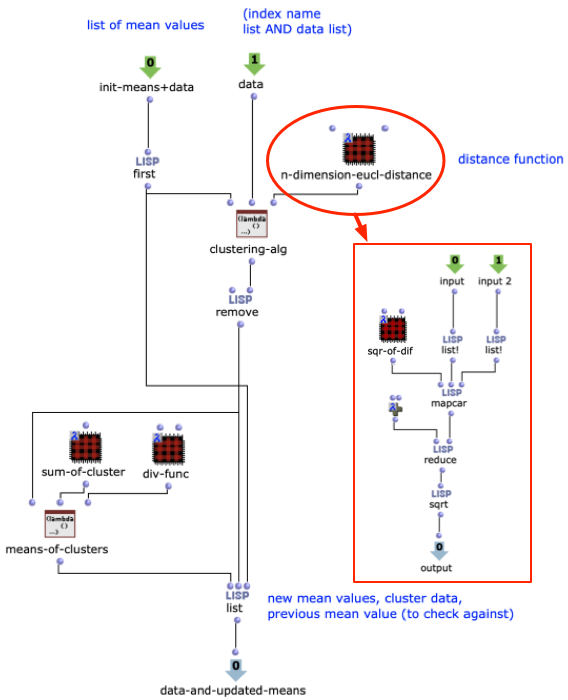

My idea was to implement the same process in OpenMusic.

For this I used the maquette and om-loops.

The OpenMusic patch contains the different processes for layering the source material.

The source material is a “filtered” violin, which was created by cross-synthesis. This processing of the source material was not done in OpenMusic.

Music cannot exist without time. Our perception relates different sounds to one another and looks for connections between them. In this process, which is comparable to rhythm, the individual object is connected to other objects. Digital sound manipulation makes it possible to derive other sounds from a single sound, and these remain related to the original.

For example, I present the sound in one form and change it at another point in the composition. This usually creates a connection, provided the listener can understand it.

The material can be transposed, that is, changed in pitch, in a similar way to notes.

With a note, this changes its frequency. With digital material, it can lead to very exciting results. On a piano, the overtones of each note are related to the fundamental. They are fixed and cannot be changed through traditional notation.

With digital material, the effect used for transposition plays a very important role. Depending on the type of effect, I have various possibilities to manipulate the material according to my own rules.

A limitation of acoustic instruments is that a violinist, for example, can only play a given note once at a time. Playing the same note ten times simultaneously requires ten violins.

In OpenMusic it is possible to play the “instrument” any number of times (as long as the computer’s processing power allows it).

Process

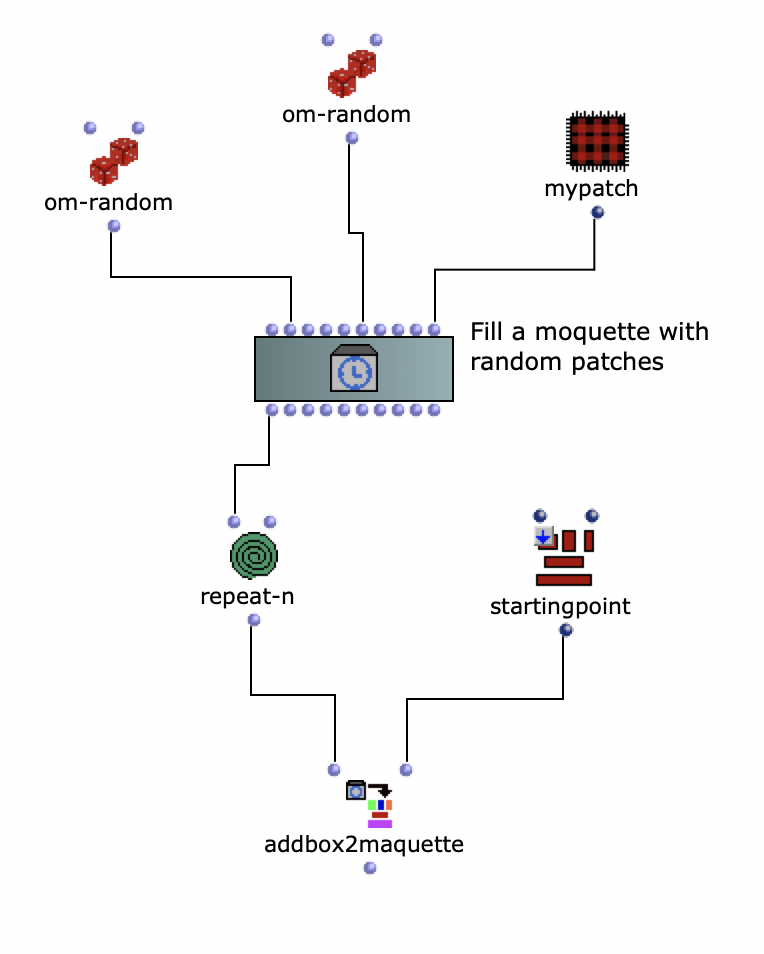

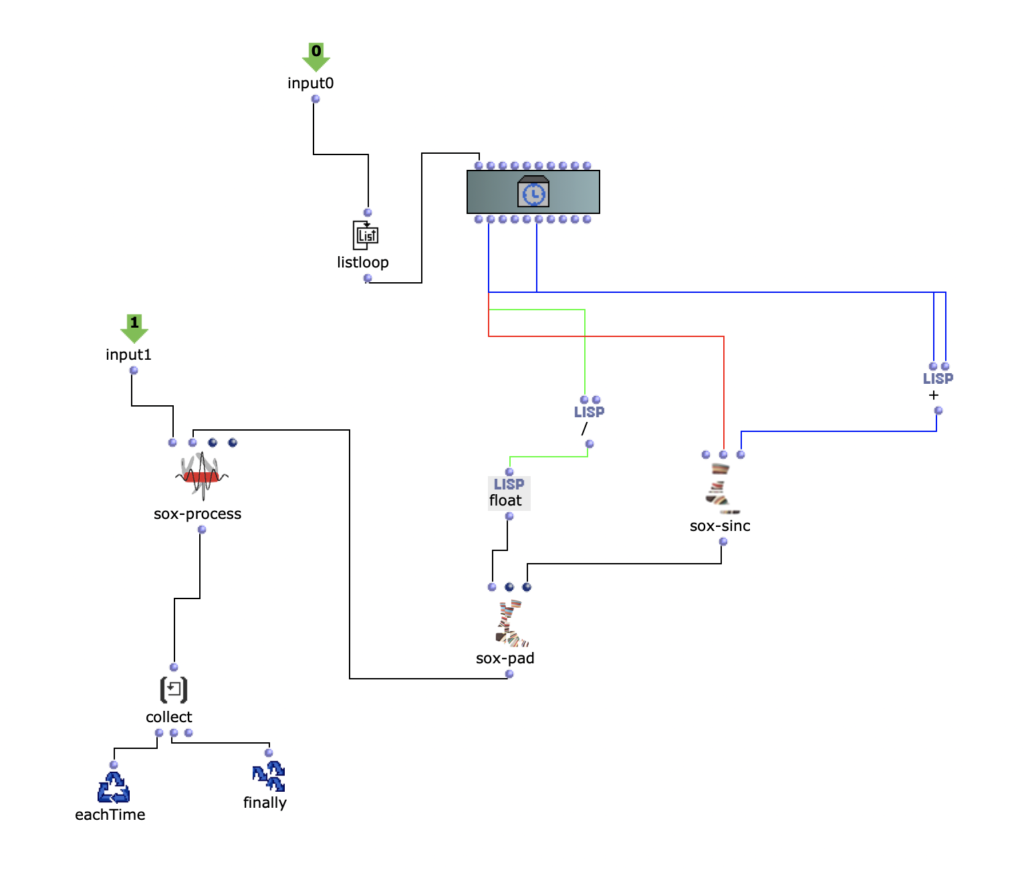

To recreate the GRM Freeze, a maquette was first filled with empty patches.

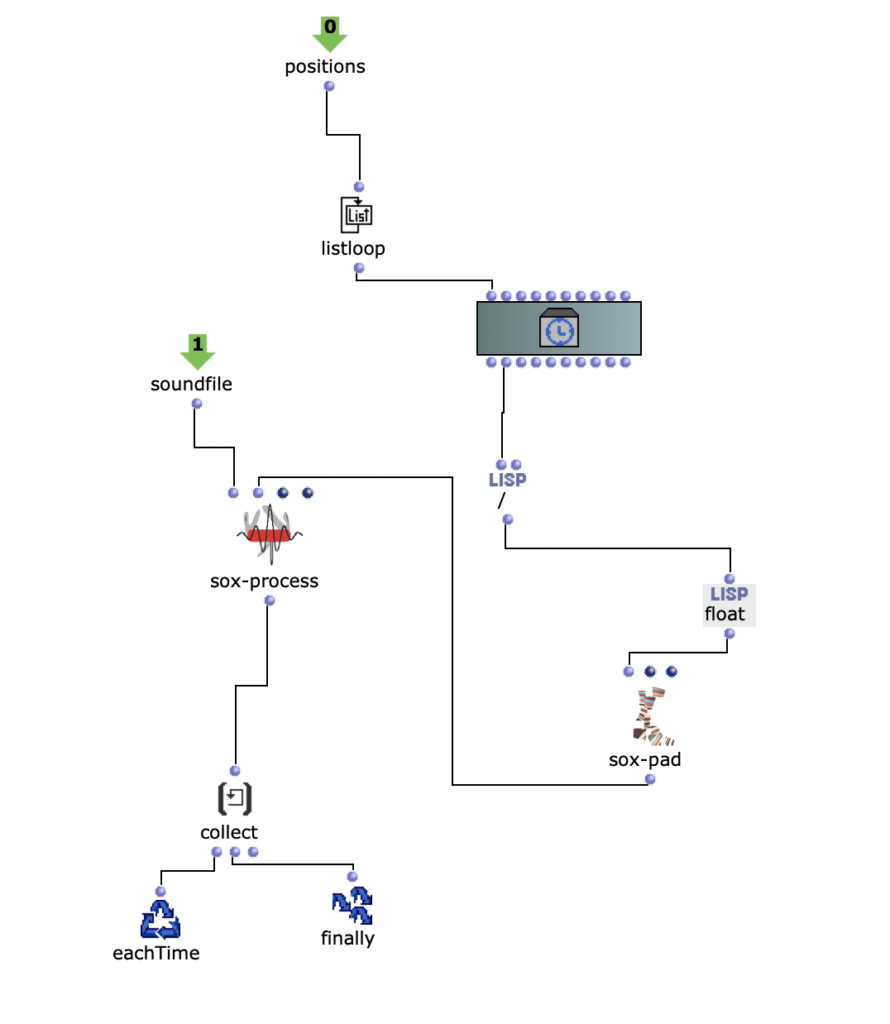

The sound file was then rendered with an om-loop at the positions of the empty patches in the maquette.
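As a rough illustration of this step, the following Python sketch places one copy of a sound at each position and sums the copies. It is not the OpenMusic patch; it assumes the positions are non-negative onset times in milliseconds, and the name `render_at_onsets` is hypothetical.

```python
def render_at_onsets(sound, onsets_ms, sample_rate=44100):
    """Mix one copy of `sound` into an output buffer at each onset (milliseconds)."""
    # Convert each onset time to a sample index (assumed non-negative).
    starts = [int(round(ms * sample_rate / 1000.0)) for ms in onsets_ms]
    total = max(starts, default=0) + len(sound)
    out = [0.0] * total
    for start in starts:
        for n, value in enumerate(sound):
            out[start + n] += value  # overlapping copies add up
    return out
```

The loop over `starts` plays the role that the om-loop has in the patch: it visits every position and renders the same material there.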

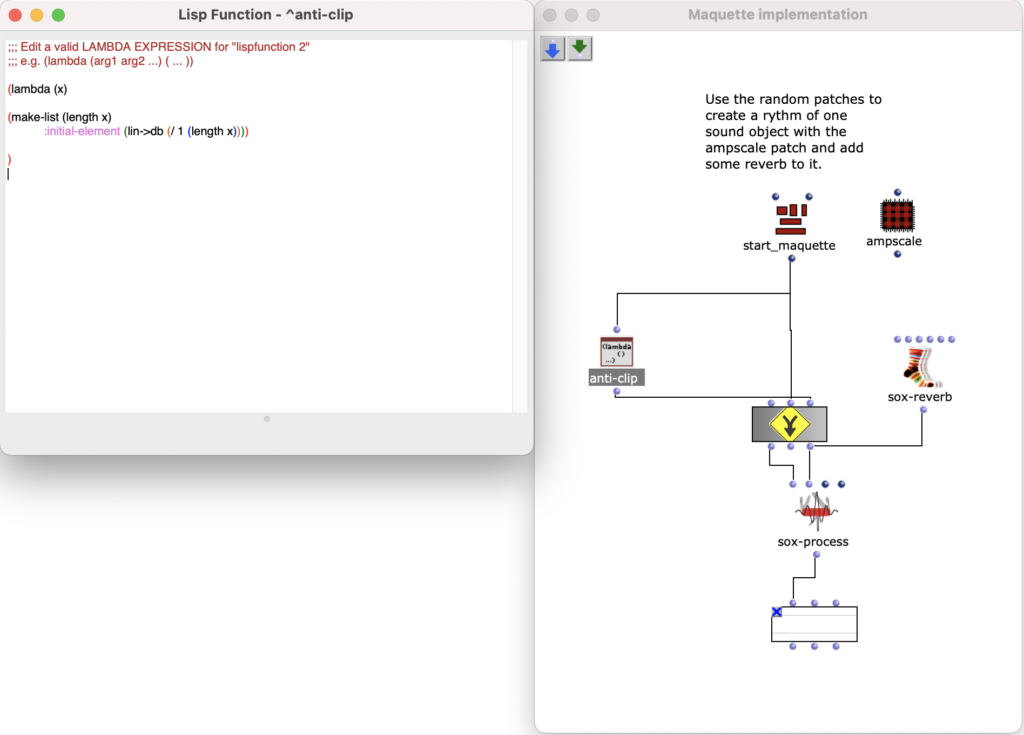

The following code was used to avoid clipping.

Layer Study first iteration

The source material is presented at the beginning. In the course of the study, it is repeatedly changed and stacked in different ways.

The study itself also plays with the dynamics. Depending on the sound stacking algorithm, the dynamics in each sound object are changed. Since several sounds overlap in time, they are normalized according to the number of sounds in the algorithm in order to avoid clipping.
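One simple way to normalize by the number of sounds is to scale every layer by 1/N before summing. The Python sketch below shows this under the assumption that each layer already lies within [-1, 1]; it is an illustration of the principle, not the code used in the patch, and `mix_normalized` is a hypothetical name.

```python
def mix_normalized(layers):
    """Sum the layers, scaling each by 1/N so the mix stays within [-1, 1]."""
    if not layers:
        return []
    gain = 1.0 / len(layers)  # N layers -> each contributes at most 1/N
    length = max(len(layer) for layer in layers)
    mix = [0.0] * length
    for layer in layers:
        for n, value in enumerate(layer):
            mix[n] += value * gain
    return mix
```

This guarantees that the sum cannot clip, at the cost of making sections with many layers quieter overall, which fits the observation that the dynamics change with the stacking algorithm.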

The study begins with the source material. This is then presented in a different temporal sequence.

This layer is then filtered and is also quieter. The next one develops into a “reverberant” sound, a continuum. The continuum remains, but it is presented differently again.

In the penultimate sound, a kind of glissando can be heard, which again ends in a sound that is similar to the second, but louder.

The process of stacking and changing the sound is very similar for each section.

The position is given by the empty patch in the maquette.

Then the y-position and x-position parameters are used for modulation.
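Which parameters are modulated by the positions is not specified here. Purely as a hypothetical example, the following Python sketch keeps the x-position as an onset and maps the y-position to a gain between 0 and 1. The mapping, the value range `y_min` to `y_max`, and the name `box_to_params` are all assumptions made for illustration.

```python
def box_to_params(x_ms, y, y_min=0.0, y_max=100.0):
    """Map a box position to (onset_ms, gain); the y -> gain mapping is hypothetical."""
    if y_max <= y_min:
        raise ValueError("y_max must be greater than y_min")
    clamped = min(max(y, y_min), y_max)         # keep y inside the assumed range
    gain = (clamped - y_min) / (y_max - y_min)  # linear scaling to 0..1
    return x_ms, gain
```

Any other target, such as a transposition interval or a filter frequency, could be derived from the same two values in the same way.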

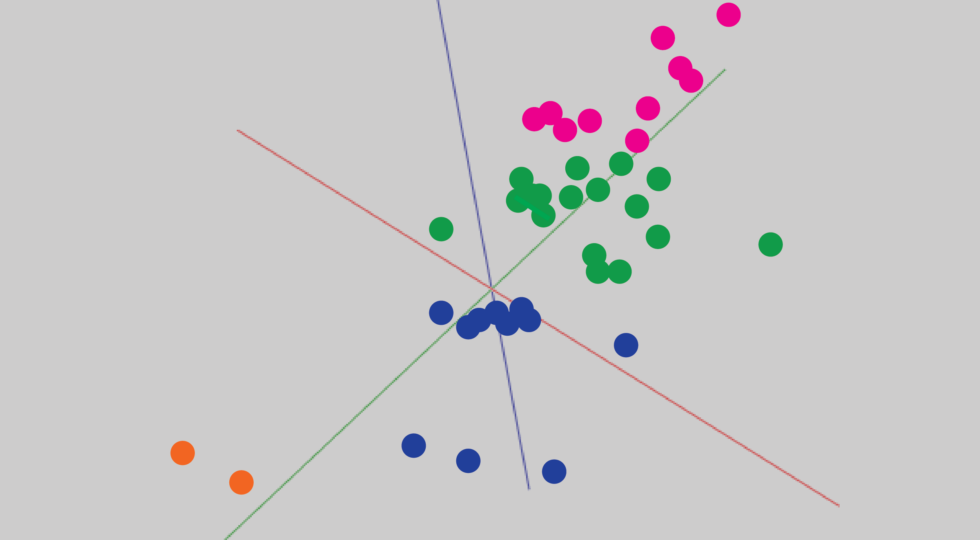

Layer Study second iteration

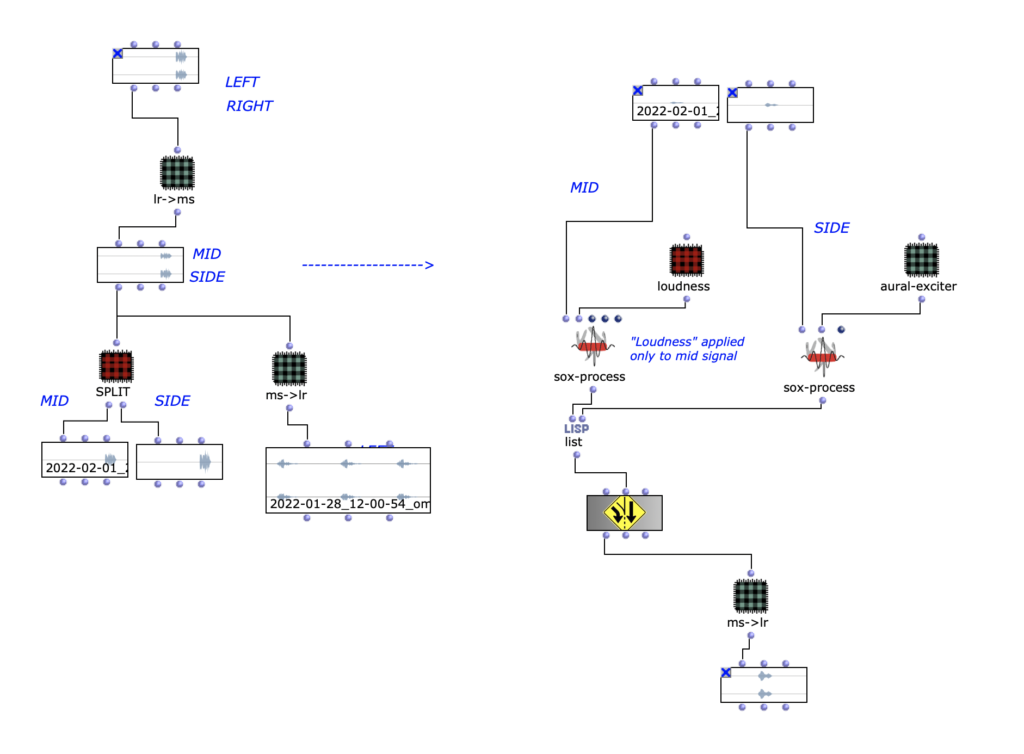

I tried to create a different stereo image for each section.

Different rooms were simulated.

One technique that was used is mid/side processing.

In this technique, the mid and side are extracted from a stereo signal using the following process:

Mid = (L + R) * 0.5

Side = (L – R) * 0.5

An aural exciter has also been added.

In this process, the signal is filtered with a high-pass filter, distorted and added back to the input signal. This allows better definition to be achieved.
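The three steps (high-pass filter, distortion, adding back to the input) can be sketched in Python as follows. The concrete choices are assumptions: a first-order high-pass, `tanh` as the distortion, and the parameter names `cutoff_hz`, `drive` and `amount` with arbitrary default values. The filter and distortion actually used in the study may differ.

```python
import math


def high_pass(samples, cutoff_hz, sample_rate=44100):
    """First-order (one-pole) high-pass filter with zero initial state."""
    rc = 1.0 / (2.0 * math.pi * cutoff_hz)
    dt = 1.0 / sample_rate
    alpha = rc / (rc + dt)
    out = []
    prev_in = 0.0
    prev_out = 0.0
    for x in samples:
        y = alpha * (prev_out + x - prev_in)
        out.append(y)
        prev_in, prev_out = x, y
    return out


def exciter(samples, cutoff_hz=3000.0, drive=4.0, amount=0.2, sample_rate=44100):
    """High-pass the input, distort it, and add the result back to the input."""
    highs = high_pass(samples, cutoff_hz, sample_rate)
    harmonics = [math.tanh(drive * h) for h in highs]  # soft clipping adds overtones
    return [x + amount * h for x, h in zip(samples, harmonics)]
```

Because only the high-passed part is distorted, the added harmonics sit in the upper range of the spectrum, while the original signal passes through unchanged.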

Thanks to the mid/side split, the aural exciter is applied to only one of the two components, which is then perceived as more “defined”.

To convert the result back to a stereo signal, the following process is used:

L = Mid + Side

R = Mid – Side
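The encoding (Mid = (L + R) * 0.5, Side = (L - R) * 0.5) and the decoding (L = Mid + Side, R = Mid - Side) can be checked with a short Python sketch. The function names `ms_encode` and `ms_decode` are hypothetical, and the channels are assumed to be plain lists of samples of equal length.

```python
def ms_encode(left, right):
    """Split a stereo signal into mid and side components."""
    mid = [(l + r) * 0.5 for l, r in zip(left, right)]
    side = [(l - r) * 0.5 for l, r in zip(left, right)]
    return mid, side


def ms_decode(mid, side):
    """Rebuild the left and right channels from mid and side."""
    left = [m + s for m, s in zip(mid, side)]
    right = [m - s for m, s in zip(mid, side)]
    return left, right
```

Decoding an unprocessed mid/side pair returns the original stereo signal, so any audible change comes only from the processing applied to the mid or side component in between.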

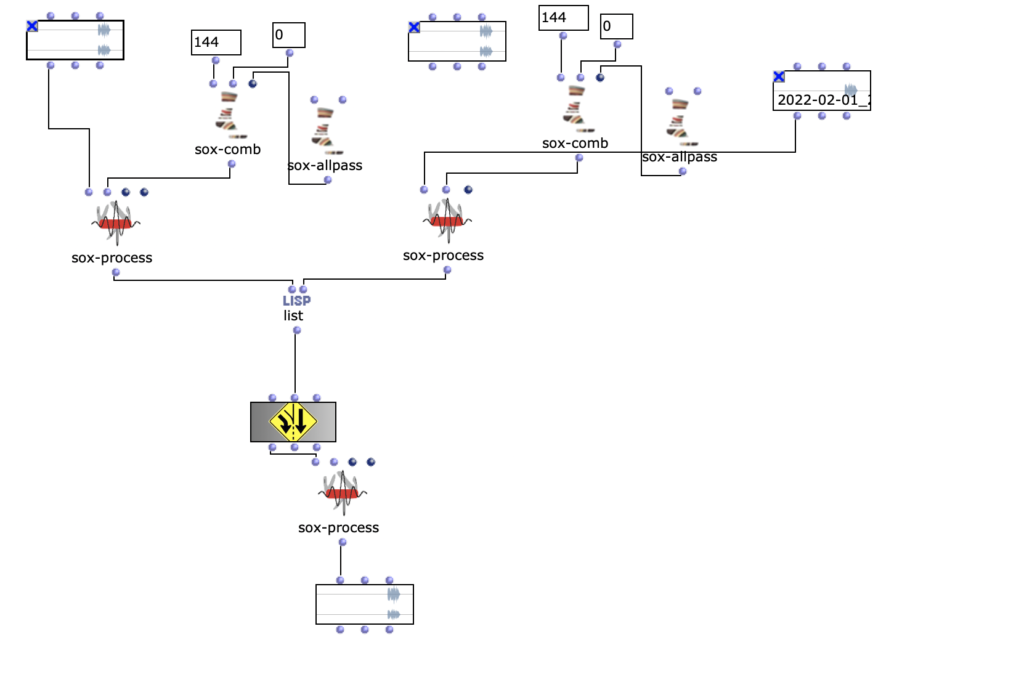

To further spatialize the sound, an all-pass filter and a comb filter were used to change the phase of the mid or side component.
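Which filter structures were used is not stated. As one possible reading, the Python sketch below shows a feedforward comb filter and a Schroeder all-pass filter, both with a delay given in samples. The names `comb_filter` and `all_pass` and the default gain are assumptions.

```python
def comb_filter(samples, delay, gain=0.5):
    """Feedforward comb: y[n] = x[n] + gain * x[n - delay]."""
    if delay < 1:
        raise ValueError("delay must be at least 1 sample")
    return [
        x + gain * (samples[n - delay] if n >= delay else 0.0)
        for n, x in enumerate(samples)
    ]


def all_pass(samples, delay, gain=0.5):
    """Schroeder all-pass: y[n] = -gain * x[n] + x[n - delay] + gain * y[n - delay]."""
    if delay < 1:
        raise ValueError("delay must be at least 1 sample")
    out = []
    for n, x in enumerate(samples):
        x_delayed = samples[n - delay] if n >= delay else 0.0
        y_delayed = out[n - delay] if n >= delay else 0.0
        out.append(-gain * x + x_delayed + gain * y_delayed)
    return out
```

The all-pass leaves the magnitude spectrum unchanged and alters only the phase, whereas the comb filter also colours the spectrum. Applied to only the mid or only the side component, both change the phase relationship between the two.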

Layer Study third iteration

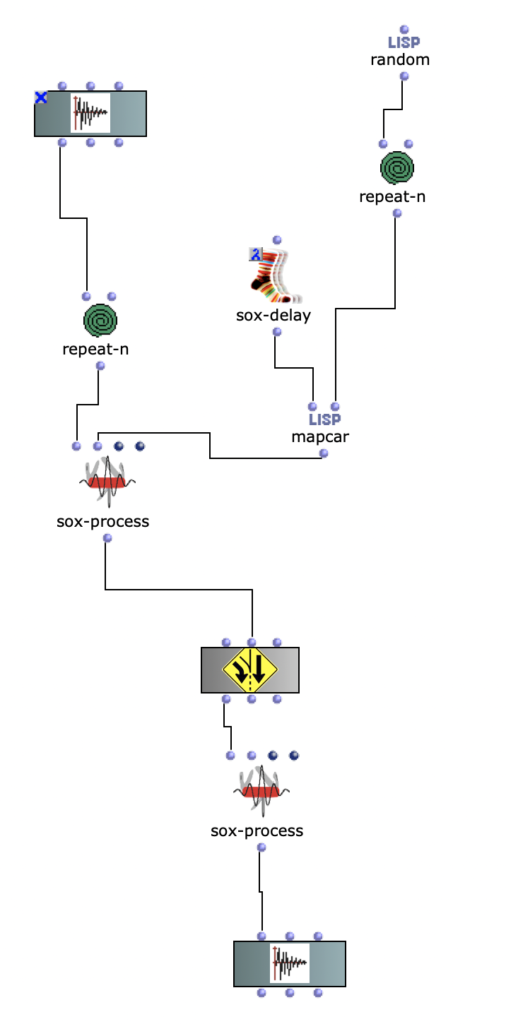

In this iteration, the stereo file was distributed across eight loudspeakers.

The different sections of the stereo composition were extracted and different splitting techniques were used.

In one of these, a different fade in and fade out was used for each channel.

In an acousmatic performance of a composition, such fades can be achieved with the faders of a mixing console.

A mapcar and repeat-n were used for this purpose.
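The principle of giving each channel its own fade can be sketched in Python, where a list comprehension takes over the role of mapping a function over a list of parameters. Linear fades with lengths in samples are an assumption, as are the names `apply_fade` and `split_with_fades`; this is not a transcription of the OpenMusic patch.

```python
def apply_fade(samples, fade_in, fade_out):
    """Return a copy of `samples` with linear fade-in and fade-out (in samples)."""
    total = len(samples)
    out = list(samples)
    for n in range(min(fade_in, total)):
        out[n] *= n / fade_in
    for n in range(min(fade_out, total)):
        out[total - 1 - n] *= n / fade_out
    return out


def split_with_fades(samples, fades):
    """Return one faded copy of `samples` per (fade_in, fade_out) pair."""
    return [apply_fade(samples, fade_in, fade_out) for fade_in, fade_out in fades]


# Eight channels: the fade-in grows while the fade-out shrinks from channel to channel.
fades = [(i * 100, (8 - i) * 100) for i in range(8)]
channels = split_with_fades([1.0] * 1000, fades)
```

With such a list of fade pairs, the same material enters and leaves each loudspeaker at a different rate, which produces movement between the channels.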

In the other processes, the position of the respective channels was changed. A delay was used for this.
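How the delay was applied is not described in detail. One possible reading is that each channel receives the same signal with a different delay. The Python sketch below illustrates this with delays given in samples; the name `delay_channels` is hypothetical.

```python
def delay_channels(samples, delays):
    """Return one copy of `samples` per delay (in samples), padded to equal length."""
    longest = max(delays, default=0)
    channels = []
    for d in delays:
        # Leading zeros delay the channel, trailing zeros equalize the lengths.
        channels.append([0.0] * d + list(samples) + [0.0] * (longest - d))
    return channels
```

Small delay differences between loudspeakers shift the perceived position of the sound, while larger ones are heard as separate repetitions.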

The final version is available on 2 channels.