Abstract: Description of Zylia's 6DoF recording system for HOA and its software, with streaming and Binauralix applications.

Responsible: Prof. Dr. Marlon Schumacher, Eveline Vervliet

Introduction

The Zylia microphone is a 19-capsule microphone array used for 3D/360° audio recording in third-order Ambisonics. It connects to your computer with a USB cable and is compact enough to transport easily.

Software

The Zylia ZM-1 requires a driver to function properly. Download the driver for your operating system here.

Zylia 6DoF Recording Application for recording with multiple Zylia microphones

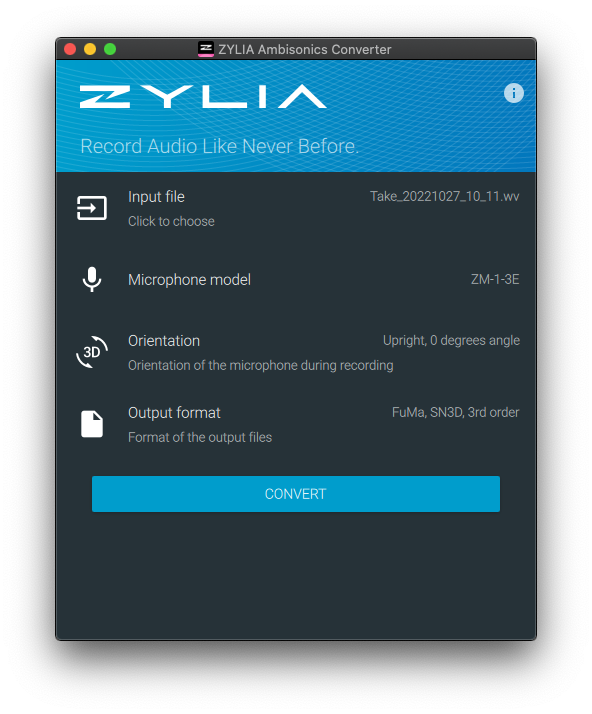

Zylia Ambisonics Converter for converting from A to B format

Zylia Control Panel with some information on the connected microphone

Zylia Streaming Application for setting up your live audio streaming with the Zylia microphone

Zylia Studio for recording with one Zylia microphone

Download the software here. Note that licenses are required.

Workflow

Recording

You can record with the Zylia microphone either in the standalone application Zylia Studio or in a DAW with the Zylia Studio Pro audio plugin. Reaper is the recommended DAW.

Conversion

To use the recordings on other platforms or in applications such as video, they have to be converted to Ambisonics B-format. You can use either the standalone Zylia Ambisonics Converter application or the Zylia Ambisonics Converter plugin.

The Ambisonics world has several competing conventions for channel ordering and normalization. The most widely used is the ambiX standard. For ambiX, choose the following settings: channel ordering 'ACN' and normalization 'SN3D'. A video from ZYLIA explains the workflow for converting a recording.
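To make the two ambiX settings more concrete, here is a minimal Python sketch of what they mean numerically. ACN assigns the spherical harmonic of degree l and order m to channel index l² + l + m, and SN3D differs from the N3D normalization by a per-degree factor of √(2l + 1). The function names are chosen for this illustration only and are not part of any Zylia software.

```python
import math
from typing import Tuple


def acn_index(degree: int, order: int) -> int:
    """Return the ACN channel index for spherical harmonic degree l and order m."""
    if degree < 0 or abs(order) > degree:
        raise ValueError("order must satisfy -degree <= order <= degree")
    return degree * degree + degree + order


def acn_to_degree_order(acn: int) -> Tuple[int, int]:
    """Invert acn_index: recover (degree, order) from an ACN channel index."""
    if acn < 0:
        raise ValueError("ACN index must be non-negative")
    degree = math.isqrt(acn)
    order = acn - degree * degree - degree
    return degree, order


def sn3d_to_n3d_gain(degree: int) -> float:
    """Gain that converts an SN3D-normalized channel of this degree to N3D."""
    return math.sqrt(2 * degree + 1)


if __name__ == "__main__":
    # List the 16 channels of a third-order ambiX stream.
    for channel in range(16):
        l, m = acn_to_degree_order(channel)
        print(f"ACN {channel:2d}: degree {l}, order {m:+d}, "
              f"SN3D->N3D gain {sn3d_to_n3d_gain(l):.4f}")
```

A tool that expects a different convention (for example N3D normalization) would need each channel scaled by the corresponding gain, so it is worth checking which convention the receiving software assumes.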

Stream on Zoom with the Zylia Microphone

Stream on Zoom with multiple speakers

Download Reaper session template

Use recording with Binauralix + BITalino R-IoT

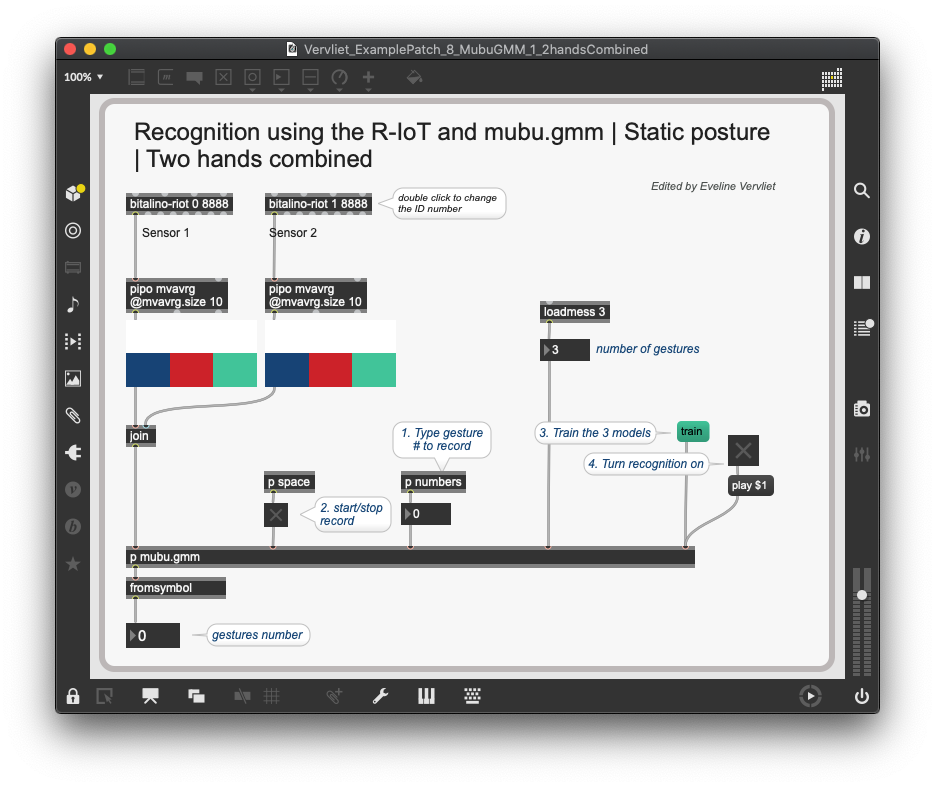

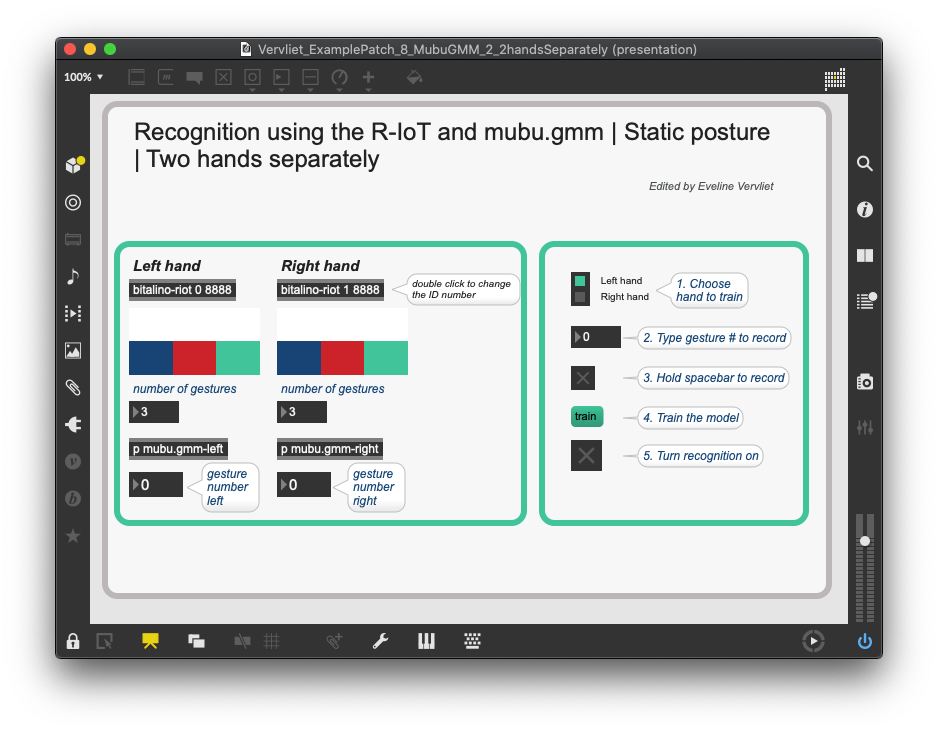

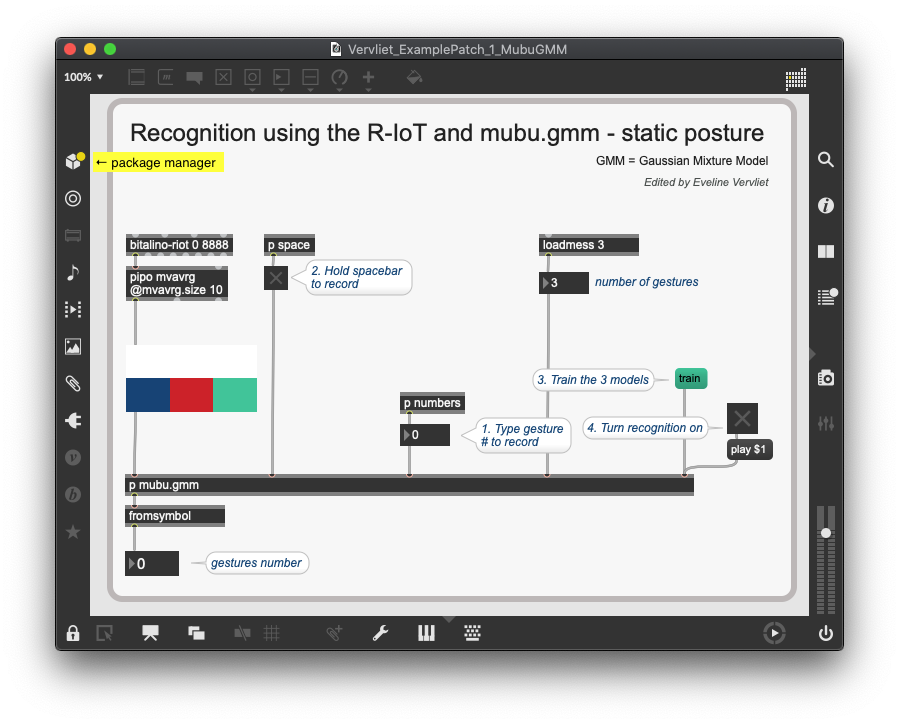

The raw recording from the Zylia microphone contains 19 channels. The converted third-order B-format file has 16 channels. First open the B-format file in software such as MultiPlayer-mini before integrating it with the open-source software Binauralix.

In the demonstration video, I open the third-order B-format file of a Zylia recording in MultiPlayer-mini and send it to Binauralix over BlackHole. Then I communicate with Binauralix over OSC from Max. In this way, I can use the BITalino R-IoT sensor to control the listening orientation in Binauralix in real time.
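The 16-channel figure follows from the general rule that a full-sphere Ambisonics signal of order N has (N + 1)² channels, whereas the 19 raw channels simply correspond to the 19 capsules. A small Python sketch of that arithmetic, with function names made up for this example:

```python
import math


def ambisonic_channel_count(order: int) -> int:
    """Number of channels in a full-sphere (3D) Ambisonics signal of the given order."""
    if order < 0:
        raise ValueError("order must be non-negative")
    return (order + 1) ** 2


def ambisonic_order_from_channels(channels: int) -> int:
    """Recover the Ambisonics order from a channel count, or raise if it is not a perfect square."""
    root = math.isqrt(channels)
    if channels < 1 or root * root != channels:
        raise ValueError(f"{channels} channels is not a valid full-sphere Ambisonics layout")
    return root - 1


if __name__ == "__main__":
    for order in range(4):
        print(f"order {order}: {ambisonic_channel_count(order)} channels")
```

A check like `ambisonic_order_from_channels` can be useful before routing: it rejects the raw 19-channel A-format file, which has not yet been converted to B-format.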

Read this blog article for more information on the BITalino R-IoT sensor.

Research

The white paper from Zylia contains the most important information on recording and post-processing with the Zylia microphone (download).

The same folder contains two more papers: Ambisonics recordings and A-B format conversion.