Abstract: Beschreibung des Inertial Motion Tracking Systems Bitalino R-IoT und dessen Software

Verantwortliche: Prof. Dr. Marlon Schumacher, Eveline Vervliet

Introduction

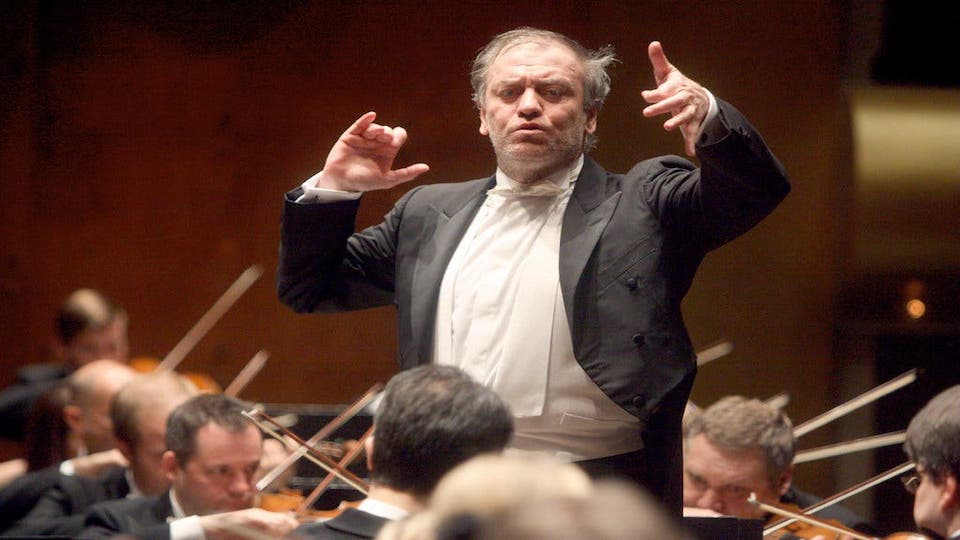

In this blog, I will explain how we can use machine learning techniques to recognize specific conductor gestures sensed via the the BITalino R-IoT platform in Max. The goal of this article is to enable you to create an interactive electronic composition for a conductor in Max.

For more information on the BITalino R-IoT, check the previous blog article.

This project is based on research by Tommi Ilmonen and Tapio Takala. Their article ‚Conductor Following with Artificial Neural Networks‘ can be downloaded here. This article can be an important lead in further development of this project.

Demonstration Patches

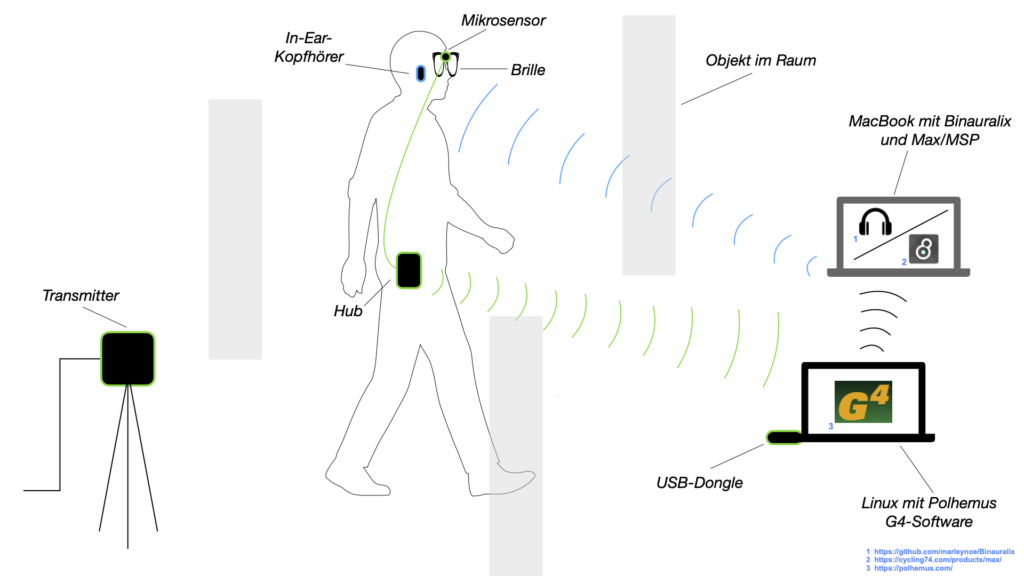

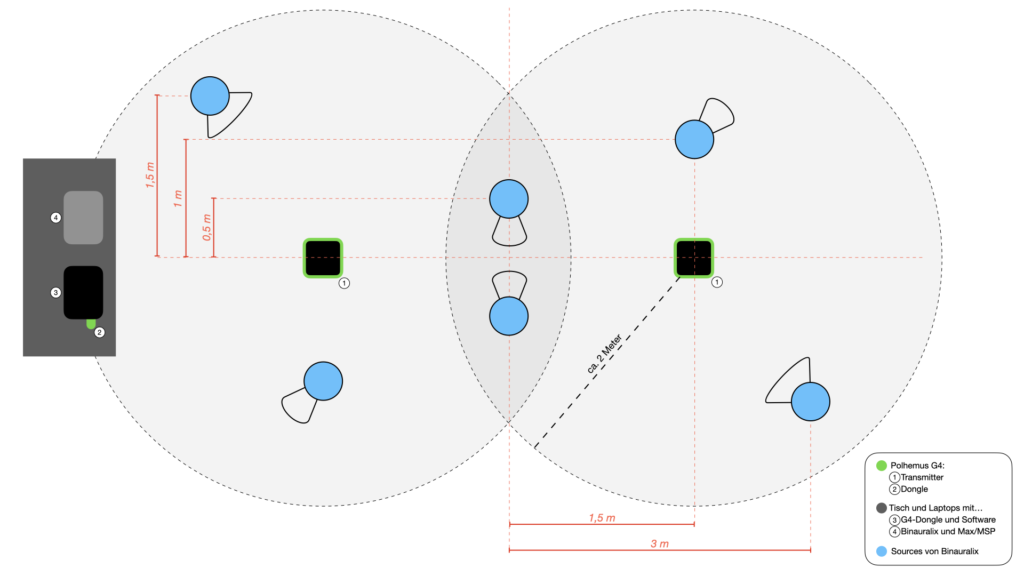

In the following demonstration patches, I have build further on the example patches from the previous blog post, which are based on Ircam’s examples. To detect conductor’s gestures, we need to use two sensors, one for each hand. You then have the choice to train the gestures with both hands combined or to train a model for each hand separately.

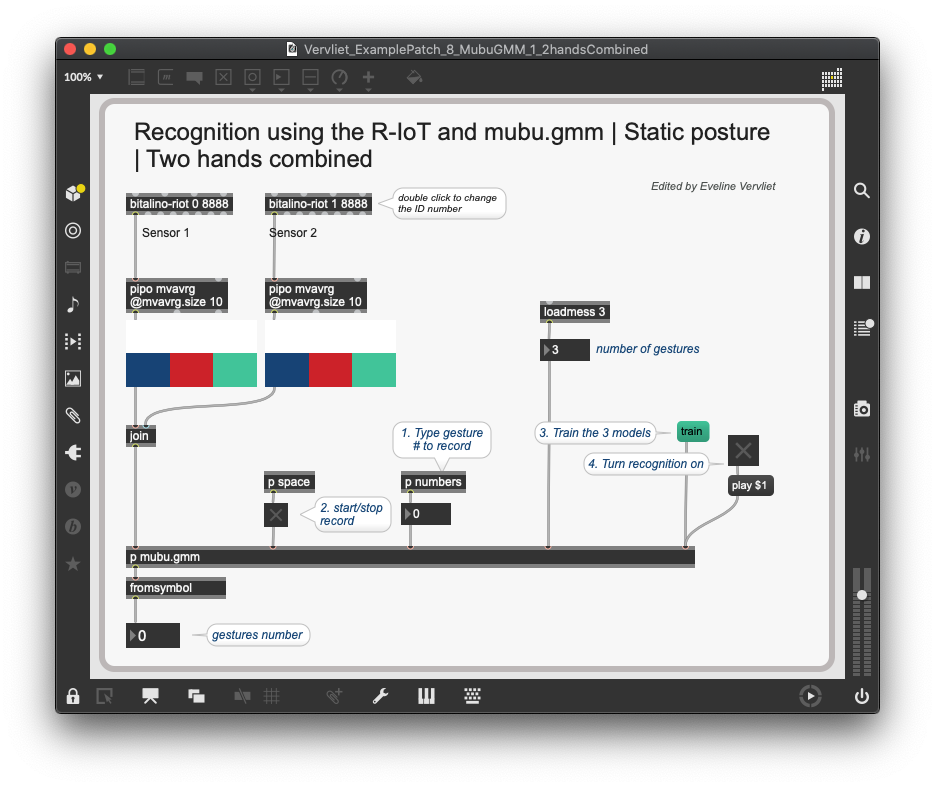

Detect static gestures with 2 hands combined

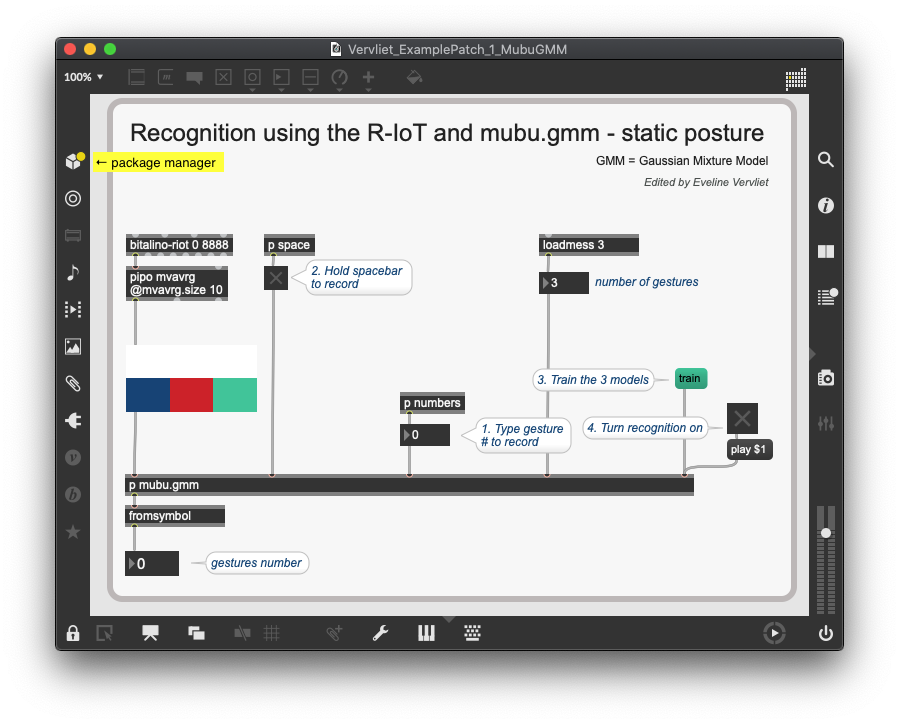

When training both hands combined, there are only a few changes we need to make to the patches for one hand.

First of all, we need a second [bitalino-riot] object. You can double click on the object to change the ID. Most likely, you’ll have chosen sensor 1 with ID 0 and sensor 2 with ID 1. The data from both sensors are joined in one list.

In the [p mubu.gmm] subpatch, you will have to change the @matrixcols parameter of the [mubu.record] object depending on the amount of values in the list. In the example, two accelerometer data lists with each 3 values were joined, thus we need 6 columns.

The rest of the process is exactly the same as in previous patches: we need to record two or more different static postures, train the model, and then click play to start the gesture detection.

Download Max patch with training example

Download training data

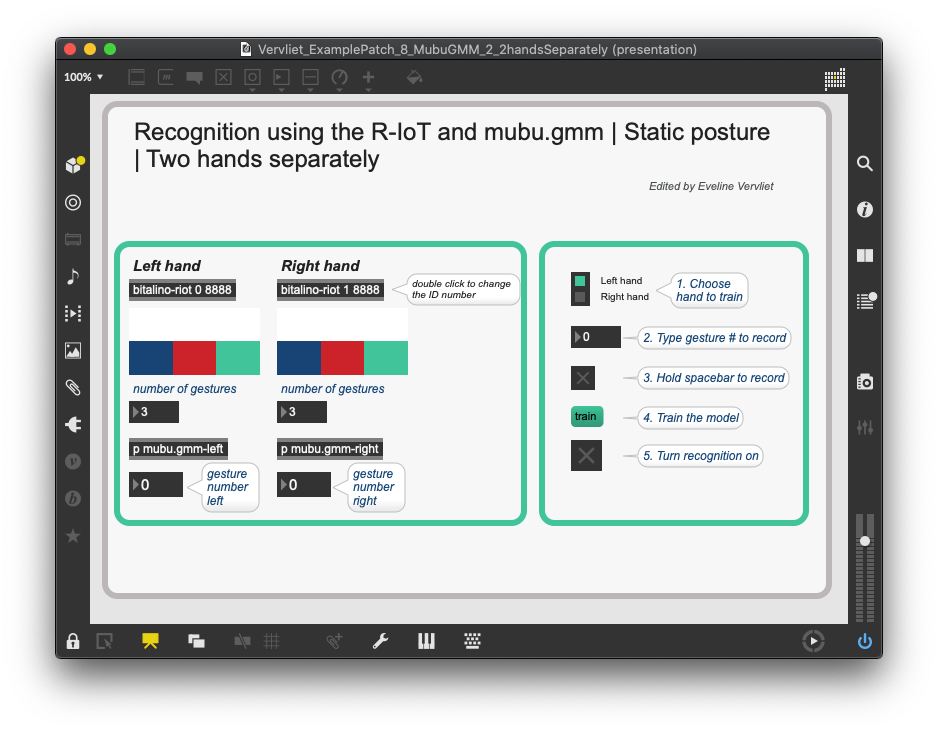

Detect static gestures with 2 hands separately

When training both hands separately, the training process becomes a bit more complex, although most steps remain the same. Now, there is a unique model for each hand, which has to be trained separately. You can see the models in the [p mubu.gmm-left] and [p mubu.gmm-right] subpatches. There is a switch object which routes the training data to the correct model.

Download Max patch with training example

Download training data

In the above example, I personally found the training with both hands separate to be most efficient: even though the training process took slightly longer, the programming after that was much easier. Depending on your situation, you will have to decide which patch makes most sense to use. Experimentation can be a useful tool in determining this.

Detect dynamic gestures with 2 hands

The detection with both hands of dynamic gestures follow the same principles as the above examples. You can download the two Max patches here:

Download Max patch mubu.hhmm with two hands combined

Download Max patch mubu.hhmm with two hands separate

Research

The mentioned tools can be used to detect ancillary gestures in musicians in real-time, which in turn could have an impact on a musical composition or improvisation. Ancillary gestures are „musician’s performance movements which are not directly related to the production or sustain of the sound“ (Lähdeoja et al.) but are believed to have an impact both in the sound production as well as in the perceived performative aspects. Wanderley also refers to this as ‘non-obvious performer gestures’.

In a following article, Marlon Schumacher worked with Wanderley on a framework for integrating gestures in computer-aided composition. The result is the Open Music library OM-Geste. This article is a helpful example of how the data can be used artistically.

Links to articles:

- Marcelo M. Wanderley – Non-obvious Performer Gestures in Instrumental Music download

- O. Lähdeoja, M. M. Wanderley, J. Malloch – Instrument Augmentation using Ancillary Gestures for Subtle Sonic Effects download

- M. Schumacher, M. Wanderley – Integrating gesture data in computer-aided composition: A framework for representation, processing and mapping download

Detecting gestures in musicians has been a much-researched topic in the last decades. This folder holds several other articles on this topic that could interest.

Links to documentation

Demonstration videos and Max patches made by Eveline Vervliet

Max patches by Ircam and other software

The folder with all the assembled information regarding the Bitalino R-IoT sensor can be found here.

This link leads to the official Data Sheet from Bitalino.