Abstract: Description of the BITalino R-IoT inertial motion tracking system and its software

Responsible: Prof. Dr. Marlon Schumacher, Eveline Vervliet

Introduction to the BITalino R-IoT sensor

The R-IoT module (IoT stands for Internet of Things) from BITalino includes several sensors that measure the motion and orientation of the board in space. It can be used for a range of artistic applications, most notably gesture capture in the performing arts. The sensor data is sent over Wi-Fi as OSC (Open Sound Control) messages, so it can be received by any OSC-capable application.
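OSC 1.0 messages are simple binary packets: a null-terminated address pattern padded to a multiple of four bytes, a type-tag string starting with a comma, and the arguments in big-endian byte order. To illustrate what an OSC-capable program does with the sensor's packets, the following minimal sketch decodes and encodes such messages using only the Python standard library. It handles only int32, float32 and string arguments and ignores OSC bundles. The address `/0/raw` and the values in the usage example are hypothetical and not taken from the R-IoT documentation; in practice the packets would arrive on a UDP socket (e.g. via `socket.recvfrom`) on whichever port the sensor is configured to send to, or you would use an existing OSC library.

```python
import struct


def _read_padded_string(data: bytes, offset: int) -> tuple[str, int]:
    """Read a null-terminated OSC string and skip the padding to the next 4-byte boundary."""
    end = data.index(b"\x00", offset)
    text = data[offset:end].decode("ascii")
    # Length including the terminating null, rounded up to a multiple of 4.
    next_offset = offset + ((end - offset) // 4 + 1) * 4
    return text, next_offset


def decode_osc_message(data: bytes) -> tuple[str, list]:
    """Decode one OSC 1.0 message with int32 ('i'), float32 ('f') and string ('s') arguments."""
    address, offset = _read_padded_string(data, 0)
    type_tags, offset = _read_padded_string(data, offset)
    if not type_tags.startswith(","):
        raise ValueError("missing OSC type-tag string")
    args = []
    for tag in type_tags[1:]:
        if tag == "i":
            args.append(struct.unpack_from(">i", data, offset)[0])
            offset += 4
        elif tag == "f":
            args.append(struct.unpack_from(">f", data, offset)[0])
            offset += 4
        elif tag == "s":
            value, offset = _read_padded_string(data, offset)
            args.append(value)
        else:
            raise ValueError(f"unsupported OSC type tag: {tag!r}")
    return address, args


def encode_osc_message(address: str, args: list) -> bytes:
    """Encode int, float and str arguments as an OSC 1.0 message (handy for testing)."""
    def pad(text: str) -> bytes:
        raw = text.encode("ascii") + b"\x00"
        return raw + b"\x00" * (-len(raw) % 4)

    tags, payload = ",", b""
    for arg in args:
        if isinstance(arg, int):  # note: bool is a subclass of int and is encoded as 'i'
            tags, payload = tags + "i", payload + struct.pack(">i", arg)
        elif isinstance(arg, float):
            tags, payload = tags + "f", payload + struct.pack(">f", arg)
        elif isinstance(arg, str):
            tags, payload = tags + "s", payload + pad(arg)
        else:
            raise TypeError(f"unsupported argument type: {type(arg).__name__}")
    return pad(address) + pad(tags) + payload


packet = encode_osc_message("/0/raw", [0.5, -1.0, 1])  # hypothetical address and values
print(decode_osc_message(packet))  # ('/0/raw', [0.5, -1.0, 1])
```

Values such as 0.5 survive the round trip exactly; arbitrary floats come back rounded to 32-bit precision.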

The R-IoT sensor outputs the following data:

- Accelerometer data (3-axis)

- Gyroscope data (3-axis)

- Magnetometer data (3-axis)

- Temperature of the sensor

- Quaternions (4 components)

- Euler angles (3-axis)

- Switch button (0/1)

- Battery voltage

- Sampling period

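The accelerometer measures the sensor’s acceleration along the x, y and z axes, including the constant acceleration due to gravity. The gyroscope measures the sensor’s angular velocity, i.e. how fast it rotates around each axis. The magnetometer measures the magnetic field along each axis, from which the sensor’s orientation relative to the earth’s magnetic field can be derived. Euler angles and quaternions describe the sensor’s orientation, i.e. its rotation relative to a reference position.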
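Euler angles and quaternions are two descriptions of the same rotation. As an illustration only, the sketch below converts a unit quaternion (w, x, y, z) into roll, pitch and yaw using the widely used Z-Y-X (yaw-pitch-roll) convention. The component order and Euler convention actually used by the R-IoT firmware are assumptions here and should be checked against the sensor’s documentation before comparing results with its Euler output.

```python
import math


def quaternion_to_euler(w: float, x: float, y: float, z: float) -> tuple[float, float, float]:
    """Convert a quaternion to (roll, pitch, yaw) in radians, Z-Y-X (yaw-pitch-roll) convention."""
    # Normalise defensively in case the incoming values are not exactly unit length.
    norm = math.sqrt(w * w + x * x + y * y + z * z)
    w, x, y, z = w / norm, x / norm, y / norm, z / norm
    roll = math.atan2(2.0 * (w * x + y * z), 1.0 - 2.0 * (x * x + y * y))
    # Clamp so rounding errors near +/-90 degrees pitch do not leave asin's domain.
    sin_pitch = max(-1.0, min(1.0, 2.0 * (w * y - z * x)))
    pitch = math.asin(sin_pitch)
    yaw = math.atan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))
    return roll, pitch, yaw


half = math.sqrt(0.5)
angles = quaternion_to_euler(half, 0.0, 0.0, half)  # a 90 degree rotation about z
print([round(math.degrees(a), 3) for a in angles])  # [0.0, 0.0, 90.0]
```

Near a pitch of ±90 degrees, roll and yaw become ambiguous (gimbal lock), which is one reason quaternions are often preferred for further processing.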

The sensor has been explored and used by the Sound Music Movement department at Ircam, which has distributed several example patches for receiving and using R-IoT data in Max. The example patches mentioned in this article are based on these.

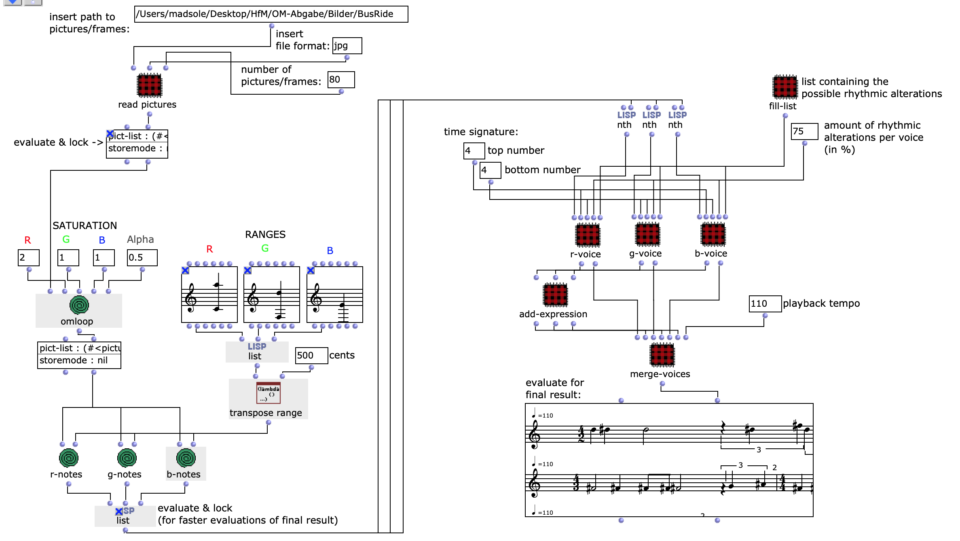

The sensor can be used with any program that can receive OSC data, such as Max and OpenMusic.

Max patches by Ircam and other software

software/

├ motion-analysis-max-master/

│ ├ max-bitalino-riot/

│ │ └ bitalino-riot-analysis-example.maxpat

│ ├ max-motion-features/

│ │ ├ freefall.maxpat

│ │ ├ intensity.maxpat

│ │ ├ kick.maxpat

│ │ ├ shake.maxpat

│ │ ├ spin.maxpat

│ │ └ still.maxpat

│ └ README.md

Demonstration Videos

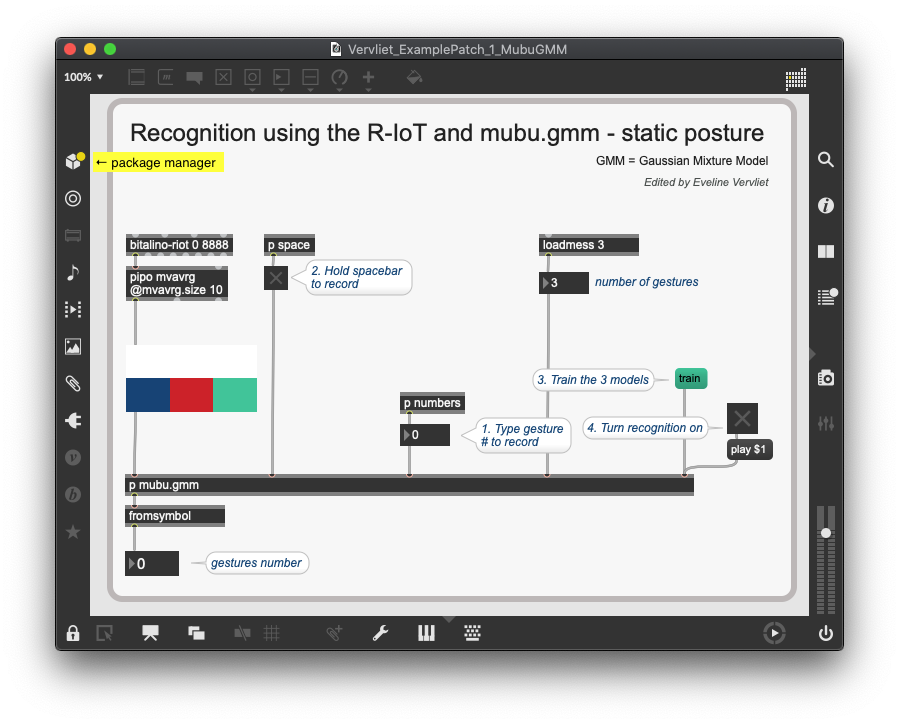

In the following demonstration videos and example patches, we use Ircam’s Mubu library for Max to record gestures with the sensor, visualise the data and train machine learning models to detect distinct postures and gestures. The “Mubu for Max” package must be installed through the Max Package Manager.

Detect static gestures with mubu.gmm

First, we use a Gaussian mixture model (GMM) through the [mubu.gmm] object to detect static gestures, i.e. postures. We record three different hand postures using the accelerometer data.
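To make the idea concrete, here is a deliberately simplified sketch, not the algorithm inside [mubu.gmm]: each posture is modelled by a single Gaussian with diagonal covariance over the three accelerometer axes (a GMM with one component per class), and a new sample is assigned to the posture with the highest likelihood. The class name, the posture labels and the sample values are hypothetical.

```python
import math
from statistics import fmean, pvariance


class GaussianPostureModel:
    """One diagonal Gaussian per posture: a single-component special case of a GMM."""

    def __init__(self, min_variance: float = 1e-4):
        self.min_variance = min_variance  # regularisation so variances never collapse to zero
        self.params = {}  # label -> (per-axis means, per-axis variances)

    def fit(self, label: str, samples: list[tuple[float, float, float]]) -> None:
        axes = list(zip(*samples))  # transpose to one value list per axis
        means = [fmean(axis) for axis in axes]
        variances = [pvariance(axis, mu) + self.min_variance for axis, mu in zip(axes, means)]
        self.params[label] = (means, variances)

    def log_likelihood(self, label: str, sample) -> float:
        means, variances = self.params[label]
        return sum(-0.5 * (math.log(2 * math.pi * var) + (x - mu) ** 2 / var)
                   for x, mu, var in zip(sample, means, variances))

    def posteriors(self, sample) -> dict[str, float]:
        """Normalised likelihoods assuming equal priors (max-subtraction for stability)."""
        logs = {label: self.log_likelihood(label, sample) for label in self.params}
        peak = max(logs.values())
        weights = {label: math.exp(value - peak) for label, value in logs.items()}
        total = sum(weights.values())
        return {label: w / total for label, w in weights.items()}

    def classify(self, sample) -> str:
        return max(self.params, key=lambda label: self.log_likelihood(label, sample))


model = GaussianPostureModel()
# Hypothetical recordings of three hand postures (x, y, z accelerometer values).
model.fit("flat", [(0.0, 0.0, 1.0), (0.05, -0.02, 0.98), (-0.03, 0.01, 1.02)])
model.fit("tilted", [(0.7, 0.0, 0.7), (0.68, 0.05, 0.72), (0.72, -0.04, 0.69)])
model.fit("vertical", [(1.0, 0.0, 0.0), (0.98, 0.03, 0.05), (1.01, -0.02, -0.03)])
print(model.classify((0.02, 0.0, 0.99)))  # flat
```

A real GMM uses several Gaussian components per class, fitted with expectation-maximisation, which lets one posture cover more varied recordings.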

Detect dynamic gestures with mubu.hhmm

The HHMM (hierarchical hidden Markov model) can be used through the [mubu.hhmm] object to detect dynamic (i.e. moving) hand gestures.
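A central computation in HMM-based recognition is the forward algorithm, which gives the likelihood of an observed sequence under each trained model. The following sketch shows a plain, non-hierarchical HMM with one-dimensional Gaussian emissions; [mubu.hhmm] additionally organises gesture models in a hierarchy and works in real time, which is not reproduced here. The helper `left_to_right_model`, the gesture names and all numbers are hypothetical.

```python
import math


def gaussian_pdf(x: float, mean: float, std: float) -> float:
    """Density of a one-dimensional normal distribution."""
    return math.exp(-0.5 * ((x - mean) / std) ** 2) / (std * math.sqrt(2 * math.pi))


def forward_log_likelihood(observations, start, transitions, means, stds) -> float:
    """Scaled forward algorithm: log P(observations | HMM) with 1-D Gaussian emissions."""
    n_states = len(start)
    alpha = list(start)  # state distribution before the first observation
    log_likelihood = 0.0
    for t, x in enumerate(observations):
        if t > 0:
            # Propagate the normalised forward variables through the transition matrix.
            alpha = [sum(alpha[p] * transitions[p][s] for p in range(n_states))
                     for s in range(n_states)]
        alpha = [a * gaussian_pdf(x, means[s], stds[s]) for s, a in enumerate(alpha)]
        scale = sum(alpha)
        if scale == 0.0:
            return float("-inf")  # sequence impossible (or underflowed) under this model
        log_likelihood += math.log(scale)
        alpha = [a / scale for a in alpha]  # rescale each step to avoid numerical underflow
    return log_likelihood


def left_to_right_model(means, std=0.2, stay=0.6):
    """Hypothetical helper: a left-to-right HMM whose states have the given mean values."""
    n = len(means)
    start = [1.0] + [0.0] * (n - 1)
    transitions = [[0.0] * n for _ in range(n)]
    for s in range(n):
        if s + 1 < n:
            transitions[s][s], transitions[s][s + 1] = stay, 1.0 - stay
        else:
            transitions[s][s] = 1.0  # the last state absorbs
    return start, transitions, list(means), [std] * n


models = {
    "raise": left_to_right_model([0.0, 0.5, 1.0]),  # hypothetical: a rising feature value
    "lower": left_to_right_model([1.0, 0.5, 0.0]),  # hypothetical: a falling feature value
}
observed = [0.05, 0.1, 0.45, 0.55, 0.9, 1.0]
scores = {name: forward_log_likelihood(observed, *model) for name, model in models.items()}
print(max(scores, key=scores.get))  # raise
```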

Detect dynamic gestures with Mubu Gesture Follower

The Gesture Follower (GF) is a gesture recognition tool separate from the Mubu library. In the following video, the same movements are trained as in the [mubu.hhmm] demonstration, so the two methods can be compared directly.
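As we understand the published descriptions of the Gesture Follower, it turns a single recorded example into a left-to-right HMM with one state per recorded sample and continuously estimates how far the performer has progressed through that template. The sketch below illustrates this idea under simplifying assumptions: a one-dimensional input, a fixed Gaussian width and hypothetical transition weights for staying, advancing and skipping one sample. The class `TemplateFollower` is not part of any Ircam tool.

```python
import math


class TemplateFollower:
    """Follow a live 1-D signal along one recorded template (one HMM state per sample)."""

    def __init__(self, template, std=0.1, p_stay=0.25, p_next=0.5, p_skip=0.25):
        if len(template) < 2:
            raise ValueError("template needs at least two samples")
        self.template = list(template)
        self.std = std
        # Transition weights to the same, the next and the next-but-one state.
        self.weights = (p_stay, p_next, p_skip)
        self.alpha = None  # normalised forward probabilities over template states

    def _emission(self, x, state):
        # Unnormalised Gaussian: the constant factor cancels when alpha is normalised.
        return math.exp(-0.5 * ((x - self.template[state]) / self.std) ** 2)

    def update(self, x) -> float:
        """Feed one live sample and return the estimated progression in [0, 1]."""
        n = len(self.template)
        if self.alpha is None:
            predicted = [1.0] + [0.0] * (n - 1)  # assume the gesture starts at the template start
        else:
            predicted = [0.0] * n
            for s, a in enumerate(self.alpha):
                for step, w in enumerate(self.weights):
                    # Probability mass that would run past the end stays in the last state.
                    predicted[min(s + step, n - 1)] += a * w
        alpha = [p * self._emission(x, s) for s, p in enumerate(predicted)]
        total = sum(alpha)
        if total == 0.0:
            # Input far from the whole template: fall back to the prediction alone.
            alpha, total = predicted, sum(predicted)
        self.alpha = [a / total for a in alpha]
        best_state = max(range(n), key=self.alpha.__getitem__)
        return best_state / (n - 1)


template = [i / 9 for i in range(10)]  # hypothetical recorded gesture: a ramp from 0 to 1
follower = TemplateFollower(template)
for x in [0.0, 0.1, 0.25, 0.3, 0.45]:  # hypothetical live input, slightly off the template
    print(round(follower.update(x), 2))
```

Because the progression is estimated sample by sample, a follower of this kind can report where in a gesture the performer is before the gesture has finished.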

Gesture detection and vocalization with Mubu in Max for the BITalino R-IoT

The [mubu.xmm] object uses multimodal hierarchical hidden Markov models for gesture recognition. In the following demonstration video, gestures and audio are recorded simultaneously. After training, performing a gesture triggers the audio recorded with it, which is played back via granular or concatenative synthesis.
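Granular playback can be pictured as overlap-adding short, windowed excerpts (grains) of the recorded sound. The following generic sketch is not taken from the downloadable patches: it reads one grain per control value, where each entry of `positions` is a hypothetical read position between 0 and 1 (a gesture-derived parameter could drive it), and overlap-adds the grains with a periodic Hann window. Grain length and hop size are illustrative defaults.

```python
import math


def periodic_hann(length: int) -> list[float]:
    """Periodic Hann window: copies shifted by length/2 sum to one."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * n / length) for n in range(length)]


def granulate(source: list[float], positions: list[float],
              grain_length: int = 256, hop: int = 128) -> list[float]:
    """Overlap-add one windowed grain per entry of `positions`.

    Each position is a relative read point in [0, 1] (clamped) inside `source`;
    consecutive grains are placed `hop` samples apart in the output.
    """
    if len(source) < grain_length:
        raise ValueError("source must be at least one grain long")
    if not positions:
        return []
    window = periodic_hann(grain_length)
    out = [0.0] * (hop * (len(positions) - 1) + grain_length)
    max_start = len(source) - grain_length
    for i, pos in enumerate(positions):
        # Map the relative position to the first source sample of this grain.
        start = round(min(max(pos, 0.0), 1.0) * max_start)
        offset = i * hop
        for n in range(grain_length):
            out[offset + n] += source[start + n] * window[n]
    return out


# Stand-in for a recorded sound: one second of a 440 Hz sine at 44.1 kHz.
source = [math.sin(2 * math.pi * 440 * n / 44100) for n in range(44100)]
# Hypothetical control curve: sweep once through the recording.
positions = [k / 99 for k in range(100)]
out = granulate(source, positions)
print(len(out))  # 12928
```

With `hop` equal to half of `grain_length`, the periodic Hann windows of neighbouring grains sum to one, so a steady source comes out at a steady level apart from the fade-in and fade-out at the edges.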

Download Max patch with granulator

Download Max patch with concatenative synthesis

Download static training data

Download dynamic training data

Links to documentation

Demonstration videos and Max patches made by Eveline Vervliet

The folder with all the assembled information regarding the BITalino R-IoT sensor can be found here.

This link leads to the official BITalino data sheet.

Videos from Ircam

An example of an artistic application from Ircam on YouTube

About the author